Can Machines Reason? Unveiling the Intricacies of LLM Reasoning Approaches

Explore the different LLM reasoning approaches that are shaping industries. Understand how these models analyze, learn, and solve complex problems, providing essential insights for AI enthusiasts and professionals.

Large language models (LLMs) such as ChatGPT and Gemini are rapidly transforming industries by automating tasks and decision-making processes, and they are reshaping how we interact with technology. Understanding the different approaches to LLM reasoning helps us see how these models analyze problems and generate solutions based on their training. This article explores the main reasoning techniques associated with LLMs in depth, giving a clearer picture of how these powerful systems work. Whether you are a developer, a student, or an artificial intelligence enthusiast, it will help you grasp how LLMs are developed and, in turn, understand their behavior better.

What are Large Language Models (LLMs), and how are they impacting various industries and tasks by mimicking human thought processes?

Large Language Models (LLMs) are a widely used type of AI model that understands and generates text resembling natural human conversation. Trained on large corpora of books, articles, and websites, LLMs learn to recognize intricate structures and subtle nuances in language. This enables them to track context, answer questions, and respond in fluent, natural language.

Beyond processing language itself, LLMs can complete tasks that traditionally require human intelligence, such as writing essays, composing poetry, generating reports, summarizing texts for specific purposes, and writing code for software projects. Users can hold purposeful discussions with them, work through challenging questions, and receive detailed explanations.

LLMs are already used in many ways. LLM-powered chatbots are replacing many traditional customer service channels: they can address customer queries promptly and, by analyzing a customer's interaction history, tailor their responses to that individual's needs and preferences. LLMs are also transforming education, where they can adapt to students' needs and learning patterns to offer customized learning paths.

LLMs can also process and analyze large volumes of text quickly, which makes them valuable research tools for working with legal texts, medical records, and scientific papers.

As the technology progresses, new uses for LLMs continue to emerge, helping businesses innovate and streamline their operations. Because models can be retrained and fine-tuned on new data and feedback, LLMs not only improve existing systems but can also become more capable, reliable, and accurate over time.

Large Language Model (LLM) Reasoning Approaches

Reasoning approaches matter because they are what allow AI systems to relate ideas and comprehend information in ways that resemble human thinking. LLMs are not only tools for understanding and generating text; they are a core part of developing AI systems that can learn and act more autonomously. These models draw on several reasoning techniques to handle complex work across disciplines, from analyzing legal and medical data to improving customer service and generating content.

LLMs can interpret context, draw inferences, solve problems, and even reason counterfactually, which lets them give more precise and context-aware responses. This makes AI interactions more natural, purposeful, and consistent. By approximating human reasoning, LLMs narrow the gap between people and machines and lower the barriers to communication. A closer look at LLM reasoning methods is therefore more than an intellectual exercise; it is a way to harness AI's potential for decision-making, novel solutions, and industry transformation.

For ease of reading, we have grouped the LLM reasoning approaches into categories based on the underlying reasoning paradigm or methodology. Here's a breakdown of the categories:

- Symbolic Reasoning

- Statistical Reasoning

- Neural Network Approaches

- Analogical Reasoning

- Inductive Reasoning

- Deductive Reasoning

- Abductive Reasoning

- Counterfactual Reasoning

- Causal Reasoning

- Commonsense Reasoning

1. Symbolic Reasoning for LLMs

Symbolic reasoning is a branch of artificial intelligence that uses symbols and explicit rules to model and solve problems. It contrasts with AI techniques whose main ingredient is learning from data. In symbolic reasoning, symbols stand for objects, concepts, or conditions, and rules define how these symbols interact. Because the approach is grounded in logic and mathematics, it provides a solid foundation for decision-making.

Comparison with Statistical Representations

Whereas statistical and neural AI methods learn patterns from large amounts of data, symbolic reasoning works with explicit symbols and hand-specified rules. For instance, a neural network can learn to identify diseases by examining many medical images, while a symbolic system applies clear rules derived from medical knowledge to reach a diagnosis from textual descriptions of symptoms. Because each step follows a stated rule, it is easy to trace how a decision was reached. This makes the method especially useful when the decision-making process must remain transparent and understandable.

Advantages of Symbolic Reasoning

Symbolic reasoning stands out for its transparency and explainability. Because every step follows prescribed rules, it is clear why a particular decision was made. This matters most in fields such as law and medicine, where understanding the reasoning behind a decision is sometimes as important as the decision itself.

How Does Symbolic Reasoning Work in LLMs?

Key Components of Symbolic Reasoning Systems in LLMs

In LLM-based systems, symbolic reasoning typically relies on two components: knowledge bases, which store information as facts and rules, and rule engines, which apply logical rules to those facts to derive conclusions. These systems are designed to imitate human logical reasoning, helping LLMs make sense of complex principles and structured knowledge.

Representation of Knowledge in LLMs

Knowledge in symbolic systems is commonly represented as logical statements, semantic networks, or ontologies. For example, a legal system might encode the rule "If a person is under 18, they are considered a minor" and apply it whenever a case requires age verification.

Types of Rules Used in Symbolic Reasoning

Symbolic reasoning in LLMs can employ various types of rules:

- Production rules: If-then structures that trigger specific actions.

- Inference rules: Used to derive new facts from known facts.

These rules enable LLMs to execute tasks ranging from simple categorization to complex problem-solving by methodically applying logic.
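To make production and inference rules concrete, here is a minimal forward-chaining sketch. It assumes facts are stored as (subject, predicate, value) tuples and rules are plain Python functions; the names `forward_chain`, `minor_rule`, and `consent_rule` are hypothetical illustrations (the consent rule is invented for the example), not the API of any particular rule engine.

```python
from typing import Callable, Iterable

# A fact is a (subject, predicate, value) triple, e.g. ("alice", "age", 16).
Fact = tuple[str, str, object]
# A rule inspects the current facts and yields any facts it can derive.
Rule = Callable[[set[Fact]], Iterable[Fact]]


def minor_rule(facts: set[Fact]) -> Iterable[Fact]:
    """Production rule: IF age < 18 THEN status is 'minor'."""
    for subject, predicate, value in facts:
        if predicate == "age" and isinstance(value, int) and value < 18:
            yield (subject, "status", "minor")


def consent_rule(facts: set[Fact]) -> Iterable[Fact]:
    """Hypothetical inference rule: IF status is 'minor' THEN guardian consent is needed."""
    for subject, predicate, value in facts:
        if predicate == "status" and value == "minor":
            yield (subject, "needs_guardian_consent", True)


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Apply every rule repeatedly until no new facts can be derived."""
    known = set(facts)
    while True:
        new_facts = {fact for rule in rules for fact in rule(known)} - known
        if not new_facts:
            return known
        known |= new_facts


derived = forward_chain({("alice", "age", 16), ("bob", "age", 30)}, [minor_rule, consent_rule])
print(("alice", "needs_guardian_consent", True) in derived)
print(("bob", "status", "minor") in derived)
```

Every derived fact can be traced back to the rule that produced it, which is the transparency property discussed above.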

Examples of Symbolic Reasoning in LLM Tasks

An example of symbolic reasoning's application in LLMs can be seen in grammar correction tools. These systems use rules about language structure to identify errors and suggest corrections, effectively enhancing writing assistance technologies.
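A rule-based checker of this kind can be sketched with regular expressions. The two rules below are illustrative only; real grammar tools use far larger rule sets, and the "a"/"an" rule here checks the first letter rather than the vowel sound, so it would wrongly flag phrases like "a university".

```python
import re

# Each rule: (name, pattern to detect, function that builds a suggested fix).
RULES = [
    (
        "repeated word",
        re.compile(r"\b(\w+)\s+\1\b", re.IGNORECASE),
        lambda m: m.group(1),
    ),
    (
        "'a' before a vowel letter",
        re.compile(r"\ba\s+([aeiou]\w*)", re.IGNORECASE),
        lambda m: "an " + m.group(1),
    ),
]


def check_grammar(text: str) -> list[tuple[str, str, str]]:
    """Return (rule name, matched text, suggested replacement) for every rule hit."""
    issues = []
    for name, pattern, suggest in RULES:
        for match in pattern.finditer(text):
            issues.append((name, match.group(0), suggest(match)))
    return issues


for issue in check_grammar("She ate a apple and the the pear."):
    print(issue)
```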

Advantages and Limitations of Symbolic Reasoning in LLMs

Advantages of Symbolic Reasoning

Symbolic reasoning is highly valued for its interpretability and domain specificity. Because symbolic systems follow a clear set of rules, they are well suited to tasks whose reasoning must be verifiable, reproducible, and understandable. This clarity is indispensable in sectors like finance and law, where stakeholders need to understand and trust the methodology behind AI-driven decisions. Second, in domains with clearly defined rules and a logical structure, symbolic reasoning delivers high accuracy and consistent results.

Limitations of Symbolic Reasoning

- Knowledge acquisition bottleneck: Designing symbolic reasoning rules to be comprehensive and specific necessitates extensive labor and a high level of domain expertise.

- Scalability issues: Symbolic systems do not evolve and scale with emerging data as readily as their machine-learning counterparts, which can be retrained on new data as it becomes available.

Possible Solutions and Continuing Research

Researchers are investigating hybrid (neuro-symbolic) models that combine the rigor of symbolic reasoning with the adaptability of machine learning. These blended approaches aim to draw on the strengths of both, improving scalability and flexibility while preserving the transparency of symbolic methods.

Real-Life Uses of Symbolic Reasoning in LLMs

Symbolic reasoning has been particularly useful in domains where precision and justification of decision-making are required at high levels.

Legal Document Analysis

In legal settings, LLMs with symbolic reasoning capabilities can help lawyers by analyzing complex legal texts to surface relevant precedents and spot inconsistencies in arguments. This capability is valuable for narrowing the set of cases that need manual review and for checking compliance with applicable laws.

Medical Diagnosis Support

Symbolic reasoning is also applied in medical diagnosis support systems. Using predefined medical knowledge and rules, an LLM-based system can help medical professionals diagnose diseases from symptoms and test results, offer alternative perspectives, and surface relevant treatment guidelines.

Potential Future Applications

As the technology advances, LLMs are likely to extend symbolic reasoning into demanding areas such as automated driving, where safety is the priority, and personalized education, where customization is key.

Conclusion

Symbolic reasoning remains an important component of LLM-based systems, helping make them not only capable but also comprehensible and trustworthy. Because it can work alongside other reasoning techniques, it supports comprehensive, advanced solutions across a wide range of industries. Continued progress in symbolic reasoning is therefore likely to be crucial for making next-generation LLMs more accurate and adaptable.

2. Statistical Approach in LLM Reasoning

Statistical reasoning is the process of drawing conclusions from data using probabilities and statistical methods. It is indispensable for LLMs, which predict and generate language based on the probability of patterns observed in text. It rests on principles such as probability distributions and Bayes' theorem, which together allow a model to handle uncertainty and adjust its outputs accordingly.

Key Statistical Concepts Relevant to LLMs

Central to probabilistic reasoning in LLMs are the probability distribution, which assigns a likelihood to each possible continuation of a text, and Bayes' theorem, which describes how beliefs should be updated in light of new evidence. Together, these ideas explain how LLMs refine their predictions as they are trained on more data.
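Both ideas fit in a short sketch. `softmax` turns raw scores over a toy vocabulary into a probability distribution, which is how a language model's output layer typically produces next-token probabilities, and `bayes_update` applies Bayes' theorem to revise a belief. The vocabulary, scores, and probabilities are invented for illustration.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw scores (logits) into probabilities that sum to 1."""
    max_score = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - max_score) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def bayes_update(prior: float, likelihood: float, likelihood_if_false: float) -> float:
    """Posterior P(H | E) from P(H), P(E | H), and P(E | not H)."""
    evidence = likelihood * prior + likelihood_if_false * (1 - prior)  # P(E)
    return likelihood * prior / evidence


# Hypothetical logits for the word that follows "The cat sat on the".
next_token = softmax({"mat": 3.0, "sofa": 2.0, "moon": 0.5})
print({tok: round(p, 3) for tok, p in next_token.items()})

# Hypothetical update: prior 0.3, and the evidence is twice as likely if H holds.
print(round(bayes_update(prior=0.3, likelihood=0.8, likelihood_if_false=0.4), 3))  # 0.462
```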

Advantages of Statistical Reasoning

Statistical reasoning excels at handling large datasets and the inherent uncertainty of language. This versatility makes it well suited to tasks that demand both quick adaptation and precision, such as language translation and automated content generation.

How Does Statistical Reasoning Work in LLMs?

Key Statistical Methods Used in LLMs

Statistical language processing has long relied on approaches such as Bayesian inference and hidden Markov models (HMMs), which make predictions about text and model sequences of data. These techniques laid the groundwork for how LLMs handle the complexity of human language.
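To show what a hidden Markov model computes, here is a minimal forward-algorithm sketch that scores how likely a word sequence is under a toy two-state part-of-speech HMM. The states, words, and probabilities are hypothetical; the point is the recurrence, which sums over all hidden state paths without enumerating them.

```python
def forward(observations, states, start_p, trans_p, emit_p):
    """Return P(observations) under an HMM using the forward algorithm.

    alpha[s] is the probability of the observations seen so far AND ending in state s.
    """
    # Initialization: start in each state and emit the first observation.
    alpha = {s: start_p[s] * emit_p[s].get(observations[0], 0.0) for s in states}
    # Recursion: sum over every previous state, then emit the next observation.
    for obs in observations[1:]:
        alpha = {
            s: sum(alpha[prev] * trans_p[prev][s] for prev in states)
            * emit_p[s].get(obs, 0.0)
            for s in states
        }
    # Termination: total probability over all possible final states.
    return sum(alpha.values())


states = ("NOUN", "VERB")
start_p = {"NOUN": 0.6, "VERB": 0.4}
trans_p = {"NOUN": {"NOUN": 0.3, "VERB": 0.7}, "VERB": {"NOUN": 0.8, "VERB": 0.2}}
emit_p = {
    "NOUN": {"dogs": 0.5, "bark": 0.1, "cats": 0.4},
    "VERB": {"dogs": 0.1, "bark": 0.6, "cats": 0.3},
}

print(forward(["dogs", "bark"], states, start_p, trans_p, emit_p))
```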

Role of Statistical Techniques in Specific LLM Tasks

Statistical techniques are key to tasks like sentiment analysis, where LLMs determine the emotional tone of a text, and spam detection, where they learn which features signal spam and then predict the probability that a given email is spam.

Examples of Statistical Reasoning in LLMs

A common application of statistical reasoning is spam detection, where models estimate how likely certain phrases are to indicate spam. Today, AI systems also apply statistical methods to more dynamic functions, such as adjusting responses in real time during customer interactions.
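The spam example can be sketched with a multinomial naive Bayes classifier, a classic statistical technique swapped in here for illustration (production LLM-based filters work differently). The tiny training set is made up, and Laplace (add-one) smoothing keeps an unseen word from driving a class probability to zero.

```python
import math
from collections import Counter


def train(docs: list[tuple[str, str]]):
    """Count words per class from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in docs:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocab


def spam_probability(text, word_counts, class_counts, vocab) -> float:
    """Return P(spam | text) using naive Bayes with add-one smoothing."""
    total_docs = sum(class_counts.values())
    log_scores = {}
    for label in ("spam", "ham"):
        log_p = math.log(class_counts[label] / total_docs)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            log_p += math.log((word_counts[label][word] + 1) / denom)  # log likelihood
        log_scores[label] = log_p
    # Convert the two log scores back into a normalized probability.
    top = max(log_scores.values())
    exp_scores = {k: math.exp(v - top) for k, v in log_scores.items()}
    return exp_scores["spam"] / sum(exp_scores.values())


training = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team on friday", "ham"),
]
model = train(training)
print(round(spam_probability("free prize money", *model), 3))
print(round(spam_probability("team meeting on monday", *model), 3))
```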

Advantages and Limitations of Statistical Reasoning in LLMs

Advantages

Statistical reasoning provides several benefits in the realm of LLMs:

- Data-Driven Learning: Models learn directly from large datasets, and their language models can be updated as new data arrives.

- Scalability: Unlike traditional algorithms, which become less efficient as the volume of data grows, statistical methods scale effectively, making them well suited to applications that accumulate continuous streams of text.

- Handling Uncertainty: Statistical reasoning lets LLMs represent and reason about uncertainty, making them more adaptable to varied linguistic situations.

Limitations

Despite its strengths, statistical reasoning also has notable limitations:

- Bias in Training Data: When a model is trained on biased data, its outputs reflect those biases, which can lead to unbalanced and unfair decisions.

- Causal Inference Challenges: Statistical methods mostly identify correlations in data, and correlation does not necessarily imply causation.

Potential Solutions and Ongoing Research

To address these limitations, current research aims to develop better statistical methods and training procedures. These include initiatives to de-bias training data and make models more robust, with the goal of improving models' grasp of causal relationships as well as the accuracy and fairness of LLMs.

Real-World Applications of Statistical Reasoning in LLMs

Statistical reasoning is behind many of the practical uses of LLMs today, particularly in fields that require the processing of large datasets and the management of uncertainty:

Machine Translation

Machine translation employs statistical reasoning to estimate the probabilities of different translations, thus improving the accuracy and fluency of translated text by taking contextual factors into account.

Personalized Recommendations

LLMs use statistical inference to suggest personalized content. Streaming services, for example, analyze user behavior and viewing history to predict future interests.

Bioinformatics

Bioinformatics is an emerging area for statistical reasoning, where it helps analyze complex genetic data. Here, LLMs may help predict genetic mutations and their implications for specific diseases, assisting researchers and healthcare professionals in developing more targeted therapies.

Conclusion

Statistical reasoning is a highly beneficial LLM capability because it helps solve complex, information-intensive tasks. Combined with other AI reasoning strategies, it enables LLMs to provide sophisticated, personalized solutions across industries. Statistical techniques are expected to keep driving AI advances, increasing LLMs' capacity to tackle difficult natural language processing problems.

3. Neural Network Approaches in LLM Reasoning

Neural Network Approaches significantly enhance the reasoning capabilities of Large Language Models (LLMs), enabling them to process and interpret complex language data. These technologies empower LLMs to perform tasks that mimic human cognitive functions, making them integral in various AI applications.

Understanding Neural Networks

Defining Artificial Neural Networks

Artificial neural networks consist of interconnected layers of neurons, each of which computes a weighted sum of its inputs and passes it through an activation function to produce an output. The networks learn through backpropagation, which computes how much each internal parameter (weight) contributed to the error, so that the parameters can be adjusted to minimize the difference between the network's predictions and the target values.
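The learning loop described above can be sketched with a single sigmoid neuron trained by gradient descent on the logical AND function. With only one neuron, backpropagation reduces to a single application of the chain rule; the learning rate, epoch count, and random seed are arbitrary choices for this toy example.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs: int = 5000, lr: float = 0.5, seed: int = 0):
    """Fit weights w1, w2 and bias b so that sigmoid(w1*x1 + w2*x2 + b) matches targets."""
    rng = random.Random(seed)
    w1, w2, b = (rng.uniform(-1, 1) for _ in range(3))
    for _ in range(epochs):
        for (x1, x2), target in data:
            # Forward pass: weighted sum followed by the activation function.
            y = sigmoid(w1 * x1 + w2 * x2 + b)
            # Backward pass: for squared error E = (y - t)^2 / 2,
            # dE/dz = (y - t) * y * (1 - y) by the chain rule.
            grad_z = (y - target) * y * (1 - y)
            # Gradient descent: move each parameter against its gradient.
            w1 -= lr * grad_z * x1
            w2 -= lr * grad_z * x2
            b -= lr * grad_z
    return w1, w2, b


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w1, w2, b = train_neuron(AND_DATA)
for (x1, x2), target in AND_DATA:
    print((x1, x2), target, round(sigmoid(w1 * x1 + w2 * x2 + b), 2))
```

Deep networks repeat the same idea layer by layer, propagating the error gradient backward through every weight.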

Advantages of Neural Networks for Reasoning

Neural networks excel at identifying complex patterns in large datasets, which makes them particularly effective for reasoning tasks. Because they are data-driven, they can be retrained or fine-tuned as new information becomes available, improving their decision-making accuracy over time.

How Neural Networks Enable Reasoning in LLMs

Key Neural Network Architectures

Several neural network architectures are pivotal in LLM reasoning:

- Recurrent Neural Networks (RNNs) process sequential data one step at a time and were long the standard choice for tasks that require following the flow of language, such as narrative texts or conversations.

- Transformers utilize attention mechanisms to manage relationships and dependencies in data, significantly improving reasoning over longer texts or more complex datasets.

Role of Transformers in Understanding Context

Transformers have been revolutionary in LLMs due to their ability to focus on different parts of the input data, enhancing the model's understanding of contextual relationships within texts. This capability is essential for accurate reasoning and the generation of relevant responses.
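The attention mechanism at the heart of transformers can be sketched as scaled dot-product attention, softmax(QKᵀ/√d)V, written here in plain Python. This is a single-head illustration in which the same toy vectors serve as queries, keys, and values; real transformers add learned projections, multiple heads, masking, and many stacked layers.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Returns one output vector per query plus the attention weights used.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this position attends to each position
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for a three-token input (self-attention).
tokens = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
outputs, weights = attention(vectors, vectors, vectors)
for tok, w in zip(tokens, weights):
    print(tok, [round(x, 2) for x in w])
```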

Key Techniques for Enhancing Reasoning in Neural LLMs

- Transfer Learning and Pre-training

Transfer learning involves pre-training neural networks on extensive datasets to develop a foundational understanding of language, which is then fine-tuned for specific tasks. This technique significantly enhances the reasoning capabilities of LLMs by providing them with a robust base of knowledge before specializing.

- Chain-of-Thought Prompting

Chain-of-Thought prompting is a novel approach where LLMs are guided through logical reasoning steps before arriving at a conclusion. This technique helps in breaking down complex problems into manageable parts, making the reasoning process more transparent and traceable.
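Chain-of-thought prompting is mostly a matter of how the prompt is written. The sketch below builds a few-shot prompt whose worked example spells out intermediate steps, then extracts the final answer from a reply. No particular model API is assumed: `build_cot_prompt` and `extract_answer` are hypothetical helpers, and the reply string stands in for whatever a real model would return.

```python
import re
from typing import Optional

# One worked example whose answer shows its intermediate reasoning steps.
EXAMPLE = (
    "Q: A shop has 5 boxes with 12 pens each. It sells 17 pens. How many are left?\n"
    "A: Let's think step by step. 5 boxes x 12 pens = 60 pens. "
    "60 - 17 = 43. The answer is 43.\n"
)


def build_cot_prompt(question: str) -> str:
    """Prepend a worked example and cue the model to reason step by step."""
    return f"{EXAMPLE}\nQ: {question}\nA: Let's think step by step."


def extract_answer(reply: str) -> Optional[str]:
    """Pull the final answer from a reply that ends with 'The answer is N.'"""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", reply)
    return match.group(1) if match else None


prompt = build_cot_prompt(
    "A train has 8 cars with 40 seats each. 95 seats are taken. How many are free?"
)
print(prompt)

# Stand-in for a model's reply; a real system would send `prompt` to an LLM.
reply = "8 cars x 40 seats = 320 seats. 320 - 95 = 225. The answer is 225."
print(extract_answer(reply))
```

Because the intermediate steps appear in the reply, each one can be checked separately, which is what makes the reasoning traceable.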

Advantages and Limitations of Neural Network Approaches

Strengths of Neural Networks

Neural networks are adept at learning complex relationships within large amounts of data, making them invaluable for tasks that require nuanced understanding and decision-making. Their ability to generalize to new, unseen data after training is a significant advantage in dynamic environments.

Challenges and Research Opportunities

Despite their strengths, neural networks often function as "black boxes," with limited visibility into how decisions are made. This opacity can be a challenge in applications requiring clear audit trails or explainability. Additionally, neural networks can inherit and amplify biases present in training data, posing ethical concerns. Ongoing research is focused on improving the interpretability of these models and developing methods to mitigate bias.

Real-World Applications of Neural Network Reasoning in LLMs

Innovations in Healthcare

In the healthcare sector, neural network reasoning facilitates the development of predictive models for patient diagnosis and treatment outcomes, leveraging vast datasets to improve accuracy.

Innovations in Customer Service

Another significant application is in customer service, where neural networks analyze customer interactions to provide personalized responses and support.

Potential Future Applications

Looking ahead, neural network reasoning could transform industries such as finance, where it may predict market trends and customer behavior with greater precision. Another promising area is autonomous systems, where advanced reasoning capabilities can support better decision-making in unpredictable environments.

Conclusion

Neural network approaches are fundamentally changing the way LLMs reason, providing the groundwork for more intelligent, adaptable, and efficient AI systems. As these technologies continue to evolve, they promise to enhance the capabilities of LLMs across a broad spectrum of industries, making them more responsive and insightful in their interactions.

4. Analogical Reasoning Approaches in Large Language Models (LLMs)

Analogical reasoning is a sophisticated method that Large Language Models (LLMs) use to solve problems by identifying similarities between different situations or concepts. It enables LLMs to draw on patterns and examples learned during training to address new challenges, making it a powerful tool for enhancing AI's cognitive abilities.

What is Analogical Reasoning?

Core Principles of Analogical Reasoning

Analogical reasoning in LLMs involves transferring knowledge from known to new contexts based on identified similarities. This method is crucial for tasks where direct answers may not be available but can be inferred through parallels with previously encountered scenarios.

Types of Analogies in LLMs

Analogies come in several types, such as proportional analogies ("A is to B as C is to D") and metaphorical analogies. The focus here is on their broad application within LLMs, for example using metaphorical analogies to explain complex scientific concepts in simpler, everyday terms.

Advantages of Analogical Reasoning

The primary benefits of analogical reasoning include its ability to solve new problems based on past knowledge and to handle incomplete information effectively. This adaptability makes it invaluable in dynamic environments where rigid algorithms might fail.

How Do LLMs Utilize Analogical Reasoning?

Approaches to Implementing Analogical Reasoning

LLMs employ strategies such as case-based reasoning, where the system retrieves and adapts solutions from similar past cases to solve new problems. For example, in legal applications, LLMs might draw from previous case law to inform decisions on current cases.
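The retrieve step of case-based reasoning can be sketched as a nearest-neighbor search. Here past cases are represented as bag-of-words vectors and compared with cosine similarity; the case summaries are invented, and a real system would more likely use learned embeddings and a vector index.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (lowercased, punctuation stripped)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, cases: dict[str, str], k: int = 1) -> list[tuple[str, float]]:
    """Return the k past cases most similar to the query, best first."""
    q = vectorize(query)
    scored = [(name, cosine(q, vectorize(text))) for name, text in cases.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical case summaries.
past_cases = {
    "Case A": "tenant withheld rent because landlord failed to repair heating",
    "Case B": "employee dismissed without notice after a workplace injury",
    "Case C": "buyer sued seller over defects hidden before the sale",
}
print(retrieve("landlord refused to repair broken heating so tenant stopped paying rent", past_cases))
```

A full case-based reasoner would then adapt the retrieved case's outcome to the differences in the new situation.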

Supporting Analogical Reasoning with Knowledge Bases

Semantic representations and knowledge bases play a crucial role in supporting analogical reasoning by providing a structured memory of relationships and contexts that LLMs can draw upon to make connections between different concepts.
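As a toy illustration of how structured knowledge can support analogies, the sketch below solves proportional analogies ("A is to B as C is to ?") by finding the relation that links A to B in a small semantic network and following the same relation from C. The triples and relation names are hypothetical examples.

```python
# A tiny semantic network stored as (subject, relation, object) triples.
TRIPLES = {
    ("Paris", "capital_of", "France"),
    ("Rome", "capital_of", "Italy"),
    ("Tokyo", "capital_of", "Japan"),
    ("puppy", "young_of", "dog"),
    ("kitten", "young_of", "cat"),
}


def relations_between(a: str, b: str) -> set[str]:
    """All relations r such that (a, r, b) is in the network."""
    return {r for s, r, o in TRIPLES if s == a and o == b}


def solve_analogy(a: str, b: str, c: str) -> set[str]:
    """Answer 'a is to b as c is to ?' by transferring the a->b relation to c."""
    shared = relations_between(a, b)
    return {o for s, r, o in TRIPLES if s == c and r in shared}


print(solve_analogy("Paris", "France", "Tokyo"))
print(solve_analogy("puppy", "dog", "kitten"))
```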

Real-World Examples

In practice, LLMs use analogical reasoning for tasks like creative content generation, where they produce new text modeled on the structure of successful existing content.

Challenges and Opportunities of Analogical Reasoning in LLMs

Challenges in Implementing Analogical Reasoning

One of the main challenges of analogical reasoning is identifying relevant analogies, especially when dealing with subtle semantic differences that can significantly impact the appropriateness of the analogical transfer. Additionally, maintaining the accuracy of these analogies across diverse domains poses a significant challenge.

Ongoing Research Initiative

Recent research focuses on enhancing the retrieval mechanisms in case-based reasoning systems to improve how LLMs identify and utilize relevant past cases, ensuring more accurate analogical reasoning.

Recent Technological Advancement

A notable advancement in this field is the development of more sophisticated semantic networks that better capture the nuances of complex relationships between concepts, aiding LLMs in drawing more precise analogies.

Real-World Applications of Analogical Reasoning in LLMs

Analogical reasoning is particularly valuable in fields where knowledge transfer and creative problem-solving are crucial. For instance:

Legal Research Support

In legal research, LLMs apply analogical reasoning to compare current cases with historical ones, helping lawyers find precedents and interpret laws more effectively. LexisNexis and Westlaw, for example, have introduced LLM-powered legal research features.

Creative Marketing Analogies

In marketing, LLMs generate compelling content by drawing analogies that resonate with consumers, making complex products more relatable and understandable.

Future Outlook of Analogical Reasoning in LLMs

Near-Term Practical Application

In the near term, analogical reasoning is set to enhance AI-driven customer support systems, enabling them to offer solutions based on similar past interactions, thus improving the customer experience with more personalized and accurate responses.

Long-Term Theoretical Possibilities

Looking further ahead, there is potential for analogical reasoning to revolutionize fields like educational technology, where LLMs could provide personalized learning experiences by analogically relating new teaching material to a student’s existing knowledge base.

Conclusion

Analogical reasoning enriches the cognitive capabilities of LLMs, allowing them to leverage past experiences and solve problems creatively. As this approach continues to evolve, it complements other reasoning methods within LLMs, promising to broaden the impact and applicability of AI across various industries. The future of analogical reasoning in LLMs holds significant potential to make AI interactions more natural and effective, driving innovation in problem-solving and decision-making processes.

5. Inductive Reasoning Approaches in Large Language Models (LLMs)

Large Language Models (LLMs) are pivotal in advancing how machines understand and interact with human language. Inductive reasoning, a method for deriving general rules from specific examples, is essential for enabling LLMs to learn from data and apply these learnings to new situations.

What is Inductive Reasoning?

Core Principles of Inductive Reasoning

Inductive reasoning in LLMs is the ability to move from specific observations to broader generalizations. This technique is fundamental to models trained on text data to predict future occurrences and classify data.

Key Concepts of Inductive Reasoning

1. Observation and Pattern Recognition

For LLMs, observation means collecting and analyzing massive text datasets. From these, LLMs discover regularities such as linguistic structures, word relationships, and similarities across texts. For instance, an LLM trained on customer service data will learn to recognize the problems customers commonly face and the responses that resolve them effectively, and so learn the most efficient ways to interact.

2. Hypothesis Generation

After examining the trends in its data, an LLM can form hypotheses: tentative statements about correlations in language or behavior. For example, after analyzing online reviews, an LLM might infer that positive reviews tend to include words like "love" and "perfect," while negative reviews frequently include "disappointed" and "poor."
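The review example can be reproduced with simple counting: compare how often each word appears in positive versus negative reviews and keep words that lean strongly one way as candidate hypotheses. The reviews and the `min_ratio` and `min_count` thresholds are illustrative assumptions, and an LLM does not literally run this procedure; the sketch only shows the kind of pattern being generalized.

```python
import re
from collections import Counter


def word_counts(texts: list[str]) -> Counter:
    """Lowercased word frequencies across a list of texts."""
    return Counter(w for t in texts for w in re.findall(r"[a-z']+", t.lower()))


def candidate_cues(positive, negative, min_ratio=2.0, min_count=2):
    """Hypothesize sentiment cue words from labeled examples.

    A word becomes a candidate cue if it appears at least `min_count` times in one
    class and its smoothed relative frequency there is at least `min_ratio` times
    its frequency in the other class.
    """
    pos, neg = word_counts(positive), word_counts(negative)
    vocab = pos.keys() | neg.keys()
    # Laplace (add-one) smoothing so a missing word never has zero frequency.
    pos_denom = sum(pos.values()) + len(vocab)
    neg_denom = sum(neg.values()) + len(vocab)
    cues = {"positive": [], "negative": []}
    for word in vocab:
        p = (pos[word] + 1) / pos_denom
        n = (neg[word] + 1) / neg_denom
        if pos[word] >= min_count and p / n >= min_ratio:
            cues["positive"].append(word)
        elif neg[word] >= min_count and n / p >= min_ratio:
            cues["negative"].append(word)
    return {label: sorted(words) for label, words in cues.items()}


positive_reviews = ["I love this blender, it is perfect", "Perfect size and I love the color"]
negative_reviews = ["Disappointed, the motor is poor", "Poor quality and I am disappointed"]
print(candidate_cues(positive_reviews, negative_reviews))
```

The `min_count` requirement reflects the inductive principle that a hypothesis should rest on more than one observation.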

3. Inductive Bias

The inductive biases of LLMs are the assumptions built into a model by its design or its training data. Such biases help the model generalize by steering it toward more probable interpretations of language. On the other hand, they can lead an LLM to resolve ambiguous sentences in favor of whichever reading was most common in its training set.

4. Generalization

Generalization in LLMs means extending patterns learned from a specific dataset to broader language and contexts. For example, an LLM trained to identify requests for help in emails is likely to generalize this ability to spot similar requests in live chat or on social media platforms, even when the wording differs slightly.

5. Strength of the Conclusion

The strength of an LLM’s conclusions from inductive reasoning depends on several factors:

- Sample Size: The larger and more diverse the training data, the more likely the model is to form reliable generalizations.

- Representativeness: The data must represent the true breadth of language usage among different demographics and discourse modes.

- Alternative Explanations: LLMs must account for multiple possible meanings in the data to avoid overly simplistic conclusions. A word such as "crash," for example, means something different in a financial context than in a technological one.

6. Testing and Refinement

Testing and refinement are essential as models are updated with new data. Flaws in the output prompt developers to review, critique, and fine-tune the patterns the model has learned. For instance, if an LLM tends to overemphasize sentiment in particular contexts, additional training data or parameter adjustments may be required to correct this bias.

Advantages of Inductive Reasoning

A key advantage of inductive reasoning is its ability to recognize trends and shifts in data, which matters greatly in dynamic domains where input data changes frequently.

How Do LLMs Utilize Inductive Reasoning?

Implementing Inductive Reasoning in LLMs

LLMs employ various techniques to manifest inductive reasoning:

- Pattern Recognition: Algorithms that readily recognize repeated patterns or components in data are especially useful for tasks such as sentiment analysis and topic classification.

- Generalization: Enables LLMs to apply learned patterns to new data, for example forecasting stock market movements from historical financial data.

Role of Inductive Bias

The inductive bias built into an LLM's learning process leads it to prefer certain solutions over others. It therefore has a significant impact on what the model learns and, consequently, on how well it performs particular tasks.

Practical Examples

In anomaly detection, LLMs flag patterns that deviate from the norm, such as fraudulent transactions in banking. In text exploration, they identify emerging topics by tracking changes in word usage over time.
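A simple statistical baseline for the fraud example is to learn what "normal" looks like from past transactions and flag new ones that fall far outside it. The sketch below uses a z-score against historical amounts; the amounts and the 3-standard-deviation threshold are illustrative assumptions, and real fraud systems combine many more features.

```python
import statistics


def flag_anomalies(history: list[float], new_amounts: list[float], threshold: float = 3.0):
    """Return new amounts whose z-score relative to the history exceeds the threshold."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation of normal behavior
    return [x for x in new_amounts if abs(x - mean) / stdev > threshold]


past = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.6, 49.8, 43.0]
print(flag_anomalies(past, [46.0, 52.5, 980.0, 12.0]))
```

The "norm" here is induced from examples rather than written as a rule, which is what makes this an inductive approach.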

Challenges and Considerations for Inductive Reasoning in LLMs

Inductive reasoning in LLMs faces several challenges:

- Handling Limited Data: LLMs may fail to generalize adequately from small or unrepresentative datasets, leading to wrong conclusions.

- Avoiding Overfitting: An LLM must not become so specialized to its training data that it performs poorly on new, unseen data.

Practical Example of Addressing Challenges

In practice, regularization and cross-validation are used to prevent overfitting, helping ensure that a model generalizes well across different datasets.
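Both techniques can be sketched together on a small regression problem: fit a ridge-regularized (L2-penalized) polynomial for several penalty strengths, score each with k-fold cross-validation, and keep the penalty with the lowest held-out error. The synthetic data, polynomial degree, and candidate penalties are arbitrary assumptions, and the hand-rolled solver stands in for a numerical library.

```python
import random


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def features(x: float, degree: int) -> list[float]:
    return [x ** p for p in range(degree + 1)]  # 1, x, x^2, ...


def fit_ridge(xs, ys, degree, lam):
    """Minimize squared error + lam * ||w||^2, leaving the bias term unpenalized."""
    rows = [features(x, degree) for x in xs]
    d = degree + 1
    # Normal equations: (X^T X + lam * I') w = X^T y
    a = [[sum(r[i] * r[j] for r in rows) + (lam if i == j and i > 0 else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(d)]
    return solve(a, b)


def predict(w, x):
    return sum(wi * fi for wi, fi in zip(w, features(x, len(w) - 1)))


def k_fold_mse(xs, ys, degree, lam, k=5):
    """Mean squared error over all held-out points across k folds."""
    n = len(xs)
    errors = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # every k-th point is held out
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i not in test_idx]
        w = fit_ridge([x for x, _ in train], [y for _, y in train], degree, lam)
        errors += [(predict(w, xs[i]) - ys[i]) ** 2 for i in test_idx]
    return sum(errors) / len(errors)


rng = random.Random(42)
xs = [i / 19 for i in range(20)]  # 20 evenly spaced points on [0, 1]
ys = [2 * x + rng.gauss(0, 0.2) for x in xs]  # a noisy straight line

scores = {lam: k_fold_mse(xs, ys, degree=6, lam=lam) for lam in (0.0, 1e-4, 1e-2, 1.0)}
best = min(scores, key=scores.get)
print({lam: round(s, 4) for lam, s in scores.items()}, "best lambda:", best)
```

A degree-6 polynomial can chase the noise in 16 training points; cross-validation measures that on held-out data, and the penalty restrains it.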

Real-World Applications of Inductive Reasoning in LLMs

Inductive reasoning supports numerous applications across various sectors:

- Financial Markets: LLMs analyze past trading data to forecast market trends, helping investors make well-informed decisions.

- Scientific Discovery: In an area like genomics, LLMs make use of pattern recognition to detect disease markers from large data sets.

- Retail: LLMs predict consumer buying patterns and inventory demand from sales data.

- Transportation: In the field of logistics, LLMs optimize route planning by examining the traffic patterns along with the vehicle performance data.

Future Outlook of Inductive Reasoning in LLMs

Potential for Growth and Innovation

The future of inductive reasoning in LLMs is promising, with the potential to transform how we interact with data-rich environments:

- Near-Term Practical Application: In healthcare, LLMs could use patient data to personalize treatment plans, potentially improving outcomes through interventions tailored to individual health profiles.

- Long-Term Theoretical Possibilities: In the long term, inductive reasoning may allow LLMs to formulate scientific theories or business strategies independently by combining data from many different sources.

Conclusion

Inductive reasoning is a potent tool that extends the abilities of LLMs, allowing them to learn from data and handle new challenges. Combined with other reasoning approaches, it helps LLMs provide better predictions and insights across industries. As the technology progresses, the role of inductive reasoning in AI development is likely to grow, leading to more sophisticated and intuitive AI systems.

6. Deductive Reasoning Approaches in Large Language Models (LLMs)

Deductive reasoning is a critical component in the functionality of Large Language Models (LLMs), enabling them to apply established rules to new information and reach conclusions that are guaranteed to hold whenever the premises are true. This method is fundamental for tasks requiring high precision and reliability in reasoning.

What is Deductive Reasoning?

Core Principles of Deductive Reasoning

Deductive reasoning in LLMs means applying logical principles to derive specific conclusions from general rules. It relies on syllogisms and logical inference, which ensure that the conclusion follows necessarily whenever the premises are true.

Key Concepts of Deductive Reasoning

- Syllogisms: Structured arguments that draw a conclusion from two premises. For example: all birds have feathers (premise); a sparrow is a bird (premise); therefore, a sparrow has feathers (conclusion).

- Logical Inference: The act of deriving conclusions from premises, where each conclusion follows directly from the statements that precede it.

Advantages and Limitations

Deductive reasoning yields certain conclusions, provided the initial premises are true. However, it struggles with the uncertain, inexact, or incomplete information that is common in the dynamic real world.

How Do LLMs Utilize Deductive Reasoning?

Implementing Deductive Reasoning in LLMs

LLMs employ techniques like top-down logic and chain-of-thought prompting to implement deductive reasoning:

- Top-Down Logic: Tackles complex problems by breaking them into smaller, manageable parts. For example, when an LLM works through a mathematical proof, a top-down approach splits the task into smaller pieces and solves each one in turn.

- Chain-of-Thought Prompting: Prompts LLMs to spell out their intermediate reasoning steps explicitly, which helps them solve complex problems such as math word problems and logic puzzles.
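Chain-of-thought prompting is largely a matter of how the prompt is written. The sketch below assembles a few-shot prompt in which a worked example spells out its reasoning before answering, and the new question ends with the commonly used cue "Let's think step by step." The Q/A layout and the helper name `build_cot_prompt` are illustrative assumptions, and sending the prompt to a model is left out because that depends on the provider's API.

```python
def build_cot_prompt(question, worked_examples):
    """Assemble a few-shot chain-of-thought prompt.

    Each worked example shows its intermediate reasoning before the answer,
    nudging the model to reason step by step on the new question as well.
    """
    blocks = []
    for ex in worked_examples:
        blocks.append(
            f"Q: {ex['question']}\n"
            f"A: {ex['reasoning']} The answer is {ex['answer']}."
        )
    # End with the new question and an explicit cue to reason step by step.
    blocks.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(blocks)


examples = [
    {
        "question": "All birds have feathers. A sparrow is a bird. "
                    "Does a sparrow have feathers?",
        "reasoning": "The rule covers every bird. A sparrow is a bird, "
                     "so the rule applies to sparrows.",
        "answer": "yes",
    }
]

prompt = build_cot_prompt(
    "All squares are rectangles. Every rectangle has four right angles. "
    "Does every square have four right angles?",
    examples,
)
print(prompt)
```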

Supporting Techniques

- Logical Representations and Knowledge Bases: For deductive tasks, LLMs can draw on an extensive knowledge base that stores information as logical statements and answer queries against it. These statements supply the premises needed to apply deductive reasoning correctly.
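To make the idea of a knowledge base of logical statements concrete, here is a minimal forward-chaining sketch: it repeatedly applies "if P(x) then Q(x)" rules, the same pattern as the syllogism described earlier, until no new facts appear. The fact and rule format is a simplifying assumption; real systems that pair LLMs with symbolic knowledge bases use richer logics and query languages.

```python
def forward_chain(facts, rules):
    """Derive every fact entailed by the knowledge base.

    facts: set of (predicate, entity) pairs, e.g. ("bird", "sparrow").
    rules: list of (premise, conclusion) pairs read as
           "for every x, premise(x) implies conclusion(x)".
    """
    known = set(facts)
    changed = True
    while changed:  # repeat until no rule adds anything new (a fixed point)
        changed = False
        for premise, conclusion in rules:
            for predicate, entity in list(known):
                if predicate == premise and (conclusion, entity) not in known:
                    known.add((conclusion, entity))  # modus ponens
                    changed = True
    return known


# Hypothetical knowledge base.
facts = {("bird", "sparrow")}
rules = [
    ("bird", "has_feathers"),  # all birds have feathers
    ("bird", "animal"),        # all birds are animals
    ("animal", "needs_food"),  # all animals need food
]

derived = forward_chain(facts, rules)
print(sorted(derived))
```

Because every derived fact comes from applying a rule to an existing fact, each conclusion is guaranteed to hold if the starting facts and rules are true.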

Examples in Action

In algorithmic problem-solving, LLMs apply deductive reasoning to follow a logical sequence of steps toward a solution. In legal document analysis, they apply deductive logic to check facts and claims against statutory law.

Challenges and Considerations for Deductive Reasoning in LLMs

Deductive reasoning in LLMs faces several real-world challenges:

- Validity of Initial Premises: The truth of a conclusion depends on the accuracy of the initial premises. Inaccurate or faulty premises can produce wrong conclusions even when the logic itself is correct.

- Handling Incomplete Information: Deduction requires enough information to support a conclusion. In real-world applications, where data is often incomplete or ambiguous, adhering to strict deductive logic is difficult.

Addressing the Challenges

To mitigate these problems, LLM systems can incorporate rigorous knowledge verification that checks whether premises are true and complete. Combining deductive logic with other types of reasoning, such as inductive or abductive reasoning, also helps offset the strictness of deduction.

Real-World Applications of Deductive Reasoning in LLMs

Deductive reasoning finds applications in several crucial areas:

- Legal Research Support: LLMs utilize deductive reasoning to interpret laws and regulations, supporting legal professionals in case analysis and litigation preparation.

- Verification of Factual Claims: In journalism and data checking, LLMs apply deductive reasoning to verify claims against credible sources.

- Educational Assessments: In educational technology, LLMs use deductive reasoning to score student test responses, especially in subjects that require logical analysis and critical thinking.

Future Outlook of Deductive Reasoning in LLMs

Looking ahead, deductive reasoning is set to enhance its role in fields requiring precise logical operations:

- Automated Theorem Proving: LLMs with strong deductive reasoning could assist mathematical research by proving new theorems, where each step is a logical consequence of previously established truths.

- Scientific Reasoning: Applying deductive reasoning to scientific models makes it possible to derive hypotheses from theories and test them against observed phenomena.

Conclusion

The deductive reasoning capabilities of LLMs let them carry out tasks that require following a strict logical framework, which improves the accuracy and reliability of their results. As LLM technologies advance, combining deductive reasoning with other reasoning modes will be necessary to produce more mature AI systems that can handle a wider variety of tasks.

7. Abductive Reasoning Approaches in Large Language Models (LLMs)

Abductive reasoning stands as a pivotal method within Large Language Models (LLMs) for generating hypotheses to explain observations. This approach enables LLMs to derive the most likely explanations for data inputs, making it essential for complex decision-making processes.

What is Abductive Reasoning?

Core Principles of Abductive Reasoning

Abductive reasoning is, in essence, inferring the best explanation for a given set of observations. Unlike deduction, which applies universal rules to reach a specific conclusion, or induction, which generalizes from specific occurrences, abduction starts from an incomplete set of observations and proposes the most plausible explanation for them.

Comparison with Deductive and Inductive Reasoning

- Deductive Reasoning: Starts with a general statement or hypothesis and examines the possibilities to reach a logical conclusion.

- Inductive Reasoning: Involves making broad generalizations from specific observations.

- Abductive Reasoning: Best described as 'inference to the best explanation', it is used extensively when there are multiple possible explanations for a phenomenon, and the goal is to find the most likely one.

Advantages and Limitations

One distinctive strength of abductive reasoning is its power to fill gaps in incomplete information and develop original hypotheses. Its main limitation is that it can produce several plausible explanations with no clear criteria for choosing among them.

How Do LLMs Utilize Abductive Reasoning?

Key Techniques and Implementations

- Hypothesis Generation: Abductive reasoning is a key mode of reasoning for LLMs when they receive new or partial data as input. This is particularly important in diagnostic AI, where an LLM can suggest several possible medical diagnoses from a set of symptoms.

- Abduction Frameworks: These are structured approaches that LLMs use to systematically generate and evaluate hypotheses. For example, an LLM might use a framework that incorporates elements of logical reasoning combined with machine learning techniques to refine hypothesis accuracy.
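"Inference to the best explanation" can be made concrete by scoring each candidate hypothesis by how plausible it was beforehand (a prior) and how well it accounts for the observations (a likelihood). The sketch below does this with a naive-Bayes-style product. The independence assumption, the floor value for unexplained observations, and all the numbers are illustrative, and LLMs do not literally run this computation.

```python
def rank_explanations(observations, hypotheses, floor=0.01):
    """Rank candidate explanations by prior * P(observations | hypothesis).

    hypotheses maps a name to (prior, {observation: likelihood}).
    Observations are treated as independent given the hypothesis, and an
    observation a hypothesis does not explain gets a small `floor` likelihood.
    """
    scores = {}
    for name, (prior, likelihoods) in hypotheses.items():
        score = prior
        for obs in observations:
            score *= likelihoods.get(obs, floor)
        scores[name] = score
    total = sum(scores.values())
    # Normalize so the scores read as relative plausibilities summing to 1.
    ranked = [(name, score / total) for name, score in scores.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical debugging scenario: which cause best explains what we see?
hypotheses = {
    "database outage": (0.2, {"timeouts": 0.9, "error_spike": 0.8}),
    "bad deploy": (0.5, {"error_spike": 0.9, "recent_release": 0.95}),
    "network partition": (0.3, {"timeouts": 0.8}),
}
observations = ["timeouts", "error_spike"]

for name, p in rank_explanations(observations, hypotheses):
    print(f"{name}: {p:.2f}")
```

Note that "bad deploy" starts with the highest prior but loses once the observations are taken into account, because it does not explain the timeouts.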

Role of Domain Knowledge

To perform abductive reasoning well, an LLM relies on a solid base of domain knowledge, which provides context and leads to more accurate hypotheses. For example, when debugging technical issues, an LLM with good knowledge of software design can more readily pinpoint the causes of system failures.

Examples in Action

In customer service, LLMs use abductive reasoning to propose solutions based on how similar issues were resolved in past conversations, even when the customer describes the problem only partially.

Challenges and Considerations for Abductive Reasoning in LLMs

Challenges in Implementation

Abductive reasoning in LLMs faces several challenges:

- Evaluating Plausibility: Selecting the most probable hypothesis can be difficult when several plausible explanations exist.

- Bias in Explanations: Generated hypotheses can be skewed when the training data or the model itself carries inherent biases.

Addressing Challenges

To overcome these obstacles, LLMs might incorporate techniques such as:

- Reinforcement Learning: Continuously updating which hypotheses are selected based on feedback.

- Human-in-the-loop Systems: Human reviewers refine the hypotheses generated by LLMs, helping ensure that they are both plausible and unbiased.

Real-World Applications of Abductive Reasoning in LLMs

Abductive reasoning has practical applications across various fields:

- Medical Diagnosis Support: LLMs can suggest candidate diagnoses to doctors based on symptoms and patient history, reflecting abduction's ability to work with incomplete information.

- Scientific Discovery: LLMs can propose hypotheses to explain experimental data, which may lead to new scientific findings.

- Environmental Science: LLMs apply abductive reasoning to propose explanations of how environmental changes may affect biodiversity, providing insight to conservationists.

Future Outlook of Abductive Reasoning in LLMs

The future of abductive reasoning in LLMs looks promising with advancements that could significantly enhance decision-making processes:

- Near-Term Practical Application: In customer service, LLMs could apply abductive reasoning to create personalized troubleshooting solutions based on common problems and user-specific context.

- Speculative Long-Term Potential: Future LLMs could generate creative strategies and business plans from consumer activity and market data, including hypotheses about new promotional methods that might resonate with consumers.

Conclusion

Abductive reasoning has proved to be an effective method for LLMs because it allows them to generate hypotheses and make informed decisions from incomplete data. As technology advances, it will likely play a central role in making LLMs more adaptable, capable, and able to handle challenging real-world scenarios. Integrating abductive reasoning with other forms of reasoning will make LLMs more robust and versatile, leading to more innovative and effective AI solutions.

8. Counterfactual Reasoning in Large Language Models (LLMs)

Counterfactual reasoning is an important capability of large language models (LLMs): it lets them explore alternative scenarios that did not actually happen under the existing conditions. This skill is critical for strategic planning, decision-making, and causal inference, and it underpins sophisticated AI applications.

Historical Context and Understanding Counterfactuals in LLMs

Foundational Theories

Counterfactual reasoning has its roots in both philosophy and statistics; early philosophers pondered conditional statements about what might have been. In AI, these theoretical foundations have become mechanisms that let machines simulate possible scenarios and their impact on real-world situations.

How LLMs Leverage Counterfactual Reasoning

LLMs apply counterfactual reasoning by manipulating existing data to envision alternative states. This is done using advanced techniques such as:

- Causal Inference Methods: These methods help LLMs move beyond correlations to estimate causal effects, identifying how changing different factors would affect outcomes.

- Contrastive Analysis: Compares the actions actually taken with possible alternatives to identify which factors mattered.

- Temporal Reasoning: Focuses on the sequence of steps and how changing particular steps might lead to better or worse outcomes.

- Generative Modeling: Generates realistic data for outcomes that were never actually observed.

- Probabilistic Programming: Lets developers represent uncertainty explicitly and build detailed probabilistic models.
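Several of these techniques meet in the standard three-step recipe for computing a counterfactual with a structural causal model: abduction (infer the hidden factors behind what was observed), action (change the variable of interest), and prediction (recompute the outcome). The sketch below applies the recipe to a one-equation model. The linear equation and its coefficient of 2 are assumptions chosen for illustration, not something an LLM learns in this form.

```python
def sales(ad_spend, noise):
    """Hypothetical structural equation: each unit of ad spend adds 2 units
    of sales; `noise` stands for everything else about this market."""
    return 2.0 * ad_spend + noise


def counterfactual_sales(observed_ad, observed_sales, new_ad):
    # 1. Abduction: infer the unobserved noise consistent with what happened.
    noise = observed_sales - sales(observed_ad, 0.0)
    # 2. Action: intervene by setting ad spend to the alternative value.
    # 3. Prediction: rerun the same equation, keeping the inferred noise.
    return sales(new_ad, noise)


# We spent 10 and sold 25. What would sales have been had we spent 15?
print(counterfactual_sales(10.0, 25.0, 15.0))  # 35.0
```

Keeping the inferred noise fixed is what distinguishes a counterfactual ("what would have happened in this very situation") from a plain prediction for a new situation.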

Key Example with Algorithms

Consider the scenario of analyzing marketing strategies:

- Scenario: Customer Browsing Behavior

- Counterfactual Analysis: An LLM-based system might use a generative model, such as a Variational Autoencoder (VAE), to model consumer preferences and show how shifts in marketing strategy could affect brand preference or price sensitivity.

Advantages and Limitations of Counterfactual Reasoning in LLMs

Advantages

- Enhanced Decision-Making: Analyzing different 'what-if' scenarios before committing to a decision helps identify the best possible outcome.

- Improved Risk Assessment: Risks can be assessed and mitigated before they materialize.

- Supporting Strategic Planning: Simulations let enterprises anticipate the results of different strategies and make rational decisions.

Limitations

- Data Limitations: The accuracy of counterfactual simulations is highly dependent on the quality and quantity of the data.

- Generalizability Issues: The challenge is to make sure that the simulations reproduce the real world's complexity.

- Realism in Scenarios: Making sure that the produced counterfactuals are plausible and match the real situation.

Potential Solutions

Advances in data collection and analysis techniques are helping to overcome these limitations, and research continues into making simulation results more realistic and applicable.

Real-World Applications of Counterfactual Reasoning in LLMs

Counterfactual reasoning is applied across various domains to enhance decision-making and strategic planning:

- Programmatic Advertising: LLMs apply counterfactual reasoning to user engagement data, simulating alternative scenarios to optimize ad placement. This helps identify the most efficient ways to reach the target audience and achieve the highest return on investment. A/B testing rests on the same idea of comparing outcomes under alternative choices.

- Risk Assessment in Finance: Through counterfactual simulations, LLMs evaluate how market fluctuations would affect investment portfolios, helping financial analysts make more effective risk management decisions.

- Policy Analysis: In public policy, LLMs can simulate the effects of proposed regulations on different sectors of the economy, enabling policymakers to design smarter regulatory frameworks.

- Scientific Exploration: Scientists use LLMs to develop hypotheses and test them in simulated environments, which is especially valuable in fields where direct experimentation is impossible or unethical, such as environmental science or human transgenics.
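A randomized A/B test is the simplest practical way to estimate a counterfactual: the control group shows what would likely have happened without the change. The sketch below compares two ad variants with a standard two-proportion z-test; the conversion counts are invented for illustration, and the function assumes the pooled conversion rate is strictly between 0 and 1.

```python
import math


def ab_test(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test comparing conversion rates of variants A and B.

    Returns (lift, z, two_sided_p_value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate if A and B were identical
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_b - p_a, z, p_value


# Hypothetical results: 10,000 users saw each ad variant.
lift, z, p = ab_test(200, 10_000, 260, 10_000)
print(f"lift={lift:.4f}  z={z:.2f}  p={p:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone; it does not by itself say whether the lift is large enough to matter commercially.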

Challenges and Considerations for Counterfactual Reasoning in LLMs

Evaluating Hypotheses

One of the hardest parts is validating computer-generated scenarios, especially when many interpretations are possible.

Avoiding Bias

LLMs' counterfactual scenarios need to be thoroughly checked for biases present in the training data to guarantee that the decision-making process is not compromised.

Technological Enhancements

Strategies such as reinforcement learning and human-in-the-loop review are being explored to improve the counterfactual conclusions drawn by large language models.

Future Outlook of Counterfactual Reasoning in LLMs

Near-Term Practical Implementation

- Personalized Decision Support Systems: LLMs are being designed to provide personalized advice in areas such as financial planning and healthcare by creating individual-specific scenarios and outcomes.

Long-Term Theoretical Potential

- Automated Creative Problem Solving: LLMs may eventually generate and evaluate novel ideas on their own in domains such as engineering and design, where existing methods may not be powerful enough.

Conclusion: The Future of Counterfactual Reasoning in LLMs

Counterfactual reasoning is poised to change the way LLMs interact with and understand the world, giving them not only the power to predict but also the ability to solve problems creatively. This style of problem-solving helps LLMs reason more thoroughly and arrive at detailed, thoughtful solutions, which are the basis of good decision-making.

As AI development continues, we can expect more advanced techniques that strengthen counterfactual reasoning in LLMs, with applications in marketing, public governance, and beyond. Better techniques for evaluating and improving the realism of counterfactual scenarios will make this capability more practical.

9. Causal Reasoning in Large Language Models (LLMs)

Causal reasoning is the process LLMs use to identify cause-and-effect relationships between events. This ability is critical for understanding complex narratives and for supporting scientific reasoning and decision-making.

Historical Context and Development

Causal reasoning draws on both philosophy and statistics, tracing a path from early philosophical debates about causality to sophisticated computational models in artificial intelligence. AI systems, especially LLMs, are shifting from simple rule-based approaches to models that combine deep learning with probabilistic reasoning, making it possible to simulate and predict outcomes.

Understanding Causal Relationships in LLMs

Challenges and Key Approaches

Causal reasoning in LLMs extends beyond traditional statistical analysis, addressing the why and not just the what:

- Causal Inference Methods: Methods such as potential outcome models help LLMs estimate the effect of interventions on outcomes.

- Contrastive Analysis: Lets LLMs compare the actual outcome with alternative outcomes that could have occurred under different circumstances.

- Temporal Reasoning: Requires understanding the order of events, since causes must precede their effects and the timing of events constrains which causal explanations are possible.

- Generative Modeling: Generates potential outcomes based on learned causal relationships.

- Probabilistic Programming: Represents uncertainty about causal relationships explicitly, so that the likelihood of different outcomes can be quantified.

Integration Within LLM Architecture

These methods can be integrated with LLMs through layered approaches in which causal models guide the decision-making process. For instance, a model can use probabilistic programming to evaluate the probability of different outcomes and then use temporal reasoning to adjust its predictions based on time-based data.

Advantages and Limitations of Causal Reasoning in LLMs

Advantages

- Improved Understanding of Complex Systems: Enables a deeper grasp of the causal mechanisms and dynamics of complex systems.

- Enhanced Question Answering and Decision-Making: Enables LLMs to provide answers that require an understanding of causality and to simulate the effects of potential decisions.

Limitations

- Differentiating Correlation from Causation: LLMs must distinguish true causal relationships from mere correlations, which is often hard given the complexity of real data.

- Handling Confounding Variables: Accurate causal interpretation depends on accounting for variables that influence both the cause and the effect.
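Adjusting for a confounding variable can be shown with a small worked example. Below, a hypothetical promotion appears to raise purchase rates by 38 percentage points, but the effect shrinks to 5 points once the comparison is made within each customer segment (stratification, a simple form of backdoor adjustment). This assumes customer segment is the only confounder, which real analyses can rarely take for granted; the data are invented.

```python
def naive_effect(groups):
    """Difference in success rates, ignoring the segments entirely."""
    n_t = sum(g["treated"][0] for g in groups.values())
    s_t = sum(g["treated"][1] for g in groups.values())
    n_c = sum(g["control"][0] for g in groups.values())
    s_c = sum(g["control"][1] for g in groups.values())
    return s_t / n_t - s_c / n_c


def adjusted_effect(groups):
    """Stratified (backdoor) adjustment: compare within each segment,
    then average the per-segment effects weighted by segment size."""
    total = sum(g["treated"][0] + g["control"][0] for g in groups.values())
    effect = 0.0
    for g in groups.values():
        (n_t, s_t), (n_c, s_c) = g["treated"], g["control"]
        weight = (n_t + n_c) / total
        effect += weight * (s_t / n_t - s_c / n_c)
    return effect


# Hypothetical campaign data as (customers, purchases). Loyal customers both
# buy more and were more likely to receive the promotion: a confounder.
groups = {
    "loyal": {"treated": (80, 60), "control": (20, 14)},
    "new": {"treated": (20, 4), "control": (80, 12)},
}
print(f"naive:    {naive_effect(groups):.2f}")     # 0.38
print(f"adjusted: {adjusted_effect(groups):.2f}")  # 0.05
```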

Potential Solutions

The main focus of current research is on developing sophisticated algorithms that can provide more accurate inferences of causality, while also considering confounding variables and incorporating more holistic data analysis methods.

Real-World Applications of Causal Reasoning in LLMs

Causal reasoning significantly impacts several sectors by enhancing the analytical capabilities of LLMs:

Market Research

LLMs apply causal reasoning to examine consumer behavior in detail, identifying the main factors that drive purchase decisions so that marketing strategies can be improved.

Scientific Discovery

In scientific research, LLMs apply causal inference to experimental data, helping identify the relationships between variables that lead to discoveries.

Personalized Medicine

One of the most promising applications is personalized medicine, where LLMs could analyze patient data to identify the causal factors behind health conditions and help develop customized treatment plans.

Challenges and Considerations for Causal Reasoning in LLMs

Evaluating Hypotheses and Avoiding Bias

The main difficulties are the validation of the causal hypotheses generated by LLMs and the bias that may come from the training data or the model assumptions.

Technological Enhancements

Advances in machine learning, including reinforcement learning and hybrid models that combine multiple decision-making techniques, are improving the accuracy and dependability of causal reasoning.

Future Outlook of Causal Reasoning in LLMs

Potential Developments

The future of causal reasoning in LLMs is vibrant, with potential applications that could revolutionize decision-making processes across industries:

- Personalized Recommendation Systems: By using causal reasoning to understand why users hold the preferences they do, advanced LLMs could deliver faster and more personalized recommendations.

- Causal AI for Robotics: Causal reasoning may enable robots to predict the consequences of their actions in complex real-world environments, leading to more autonomous and safer robots.

- Research and Collaborations: Academic institutions and tech companies are collaborating on research to improve causal reasoning, focusing on more sophisticated causal models that can be applied in different contexts.

Conclusion: The Future of Causal Reasoning in LLMs

Causal reasoning has great potential as the basis for LLMs that not only predict but also understand the internal processes of the systems they describe. As these models grow more complex, their ability to reason about causality will be key to their transformation from mere prediction tools into intelligent systems that make informed, reasoned decisions.

As technology advances, combining causal reasoning with other forms of reasoning, such as counterfactual reasoning, is likely to make LLMs more robust and versatile. Ongoing research and collaboration continue to develop methods that improve the realism, accuracy, and relevance of causal models.

This capability of LLMs is not only interesting for current applications but also creates new opportunities for future technological innovations. It makes LLMs indispensable tools in our quest to understand complex systems and to make better decisions based on sound causal analysis.

10. Commonsense Approach in LLM Reasoning

Commonsense reasoning in Large Language Models is the ability to apply everyday knowledge and intuition, which enables the models to perform tasks that require real-world understanding. Factual knowledge consists of explicit assertions that can be easily verified. Commonsense knowledge, by contrast, is full of implicit meanings: the things people usually accept without conscious thought.

Distinguishing Commonsense from Factual Knowledge

Factual knowledge deals with concrete facts that can be directly observed or verified, such as "Paris is the capital of France." Commonsense knowledge, on the other hand, consists of broader, often implicit insights such as "people sleep during the night" or "eating ice cream too quickly can cause a headache." This distinction is fundamental because it highlights why LLMs struggle to imitate human-like understanding that cannot be conveyed through explicit instruction alone.

Understanding Commonsense Knowledge

Challenges of Representation and Incorporation

Integrating commonsense knowledge into LLMs is extremely difficult because such knowledge is implicit and vast. Representing it in a form that machines can process and use is one of the toughest tasks in the field, requiring many advanced techniques and creative approaches.

Sources of Commonsense Knowledge

Commonsense knowledge for LLMs may come from large text corpora, crowdsourcing initiatives, and structured knowledge graphs. Together, these sources provide the varied data points needed to build a broad base of general knowledge that LLMs can draw on.

Techniques for Extracting Commonsense Knowledge

Natural language processing and machine learning techniques are used to extract usable commonsense knowledge from these sources, turning unstructured data into structured forms that LLMs can readily understand and use.
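As a rough illustration of turning unstructured text into structured knowledge, the sketch below matches a few hand-written sentence patterns and emits (head, relation, tail) triples. The relation names follow the style of the ConceptNet knowledge graph (`UsedFor`, `Causes`, `IsA`), but the patterns themselves are a toy assumption; practical extractors are learned models that handle far more varied phrasing.

```python
import re

# Hand-written surface patterns mapped to ConceptNet-style relation names.
# The first pattern that matches a sentence wins.
PATTERNS = [
    (re.compile(r"^(?:an? )?(.+?) is used for (.+?)\.?$", re.IGNORECASE), "UsedFor"),
    (re.compile(r"^(?:an? )?(.+?) can cause (.+?)\.?$", re.IGNORECASE), "Causes"),
    (re.compile(r"^(?:an? )?(.+?) is an? (.+?)\.?$", re.IGNORECASE), "IsA"),
]


def extract_triples(sentences):
    """Turn simple sentences into (head, relation, tail) triples."""
    triples = []
    for sentence in sentences:
        for pattern, relation in PATTERNS:
            match = pattern.match(sentence.strip())
            if match:
                head, tail = match.group(1).lower(), match.group(2).lower()
                triples.append((head, relation, tail))
                break  # keep only the first matching pattern
    return triples


sentences = [
    "An umbrella is used for staying dry.",
    "Eating ice cream too quickly can cause a headache.",
    "A sparrow is a bird.",
]
for triple in extract_triples(sentences):
    print(triple)
```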

How LLMs Leverage Commonsense Reasoning

Integration Techniques

LLMs employ several techniques to integrate commonsense knowledge into their reasoning processes:

- Commonsense Reasoning Modules: Specialized components designed for tasks that require understanding implicit information, such as interpreting nuances in a conversation or predicting human behavior.

- Commonsense Knowledge Embedding: Commonsense knowledge is represented as vectors, which LLMs can work with directly while processing input.
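Representing knowledge as vectors lets related concepts be compared with simple geometry. The sketch below uses cosine similarity to find which concept is most closely associated with "rain". The three-dimensional vectors are hand-picked for illustration and are not taken from any real model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_related(query, vectors):
    """Return the concept whose vector points most nearly the same way as query's."""
    return max(
        (name for name in vectors if name != query),
        key=lambda name: cosine_similarity(vectors[query], vectors[name]),
    )


# Hand-picked 3-dimensional toy vectors (hypothetical). Real embeddings are
# learned from data and typically have hundreds or thousands of dimensions.
concepts = {
    "umbrella": [0.9, 0.1, 0.0],
    "rain": [0.8, 0.3, 0.1],
    "sunscreen": [0.1, 0.9, 0.2],
}
print(most_related("rain", concepts))  # umbrella
```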

In-depth Example: Interpreting Sarcasm

Consider an LLM analyzing the sentence “Great, I just love working late on Fridays.” Commonsense reasoning lets the LLM detect the sarcasm by recognizing that most employees do not enjoy working late, especially on Fridays. This interpretation relies on the model combining different reasoning strategies with knowledge embeddings to process such nuances.

Advantages and Limitations of Commonsense Reasoning in LLMs

Advantages

- Enhanced Real-World Task Performance: Commonsense reasoning improves LLM performance on tasks that require understanding context and implicit information, such as holding natural conversations or giving context-aware recommendations.

- Safety and Ethics: By understanding human norms and expectations, LLMs can produce considerate and appropriate responses, reducing the chance of generating harmful or biased content.

Limitations

- Complexity of Capturing Commonsense Knowledge: The breadth and ambiguity of commonsense knowledge make it hard to model and replicate.

- Integration Challenges: Combining commonsense and formal reasoning approaches is technically difficult because the two differ fundamentally in nature.

Real-World Applications of Commonsense Reasoning in LLMs

Commonsense reasoning enhances the utility of LLMs across a variety of applications:

- Smart Assistants: Smart assistants powered by LLMs use commonsense reasoning to understand and respond to user requests appropriately. For example, when a user asks whether they should grab a jacket, the assistant infers that the question is really about the weather.

- Autonomous Vehicles: In the automotive industry, LLMs with commonsense reasoning could help vehicles navigate complex environments more safely by anticipating the likely behavior of pedestrians and other drivers, such as slowing down at crosswalks even when the light is green.

- Social Media Analysis: With commonsense reasoning, LLMs can detect and understand social nuances such as sarcasm or subtly offensive content that often goes unflagged online.

Challenges and Considerations for Commonsense Reasoning in LLMs

Despite its advantages, commonsense reasoning presents several challenges:

- Data and Bias: A key issue is ensuring that the commonsense knowledge incorporated into LLMs is free from bias and covers a wide range of human experience. Data quality critically affects how well a model understands its input and what it produces.

- Generalization and Adaptation: LLMs often struggle to generalize learned commonsense knowledge to new or unfamiliar situations, especially when the context differs significantly from their training data.

Future Outlook of Commonsense Reasoning in LLMs

Potential Developments

The future of commonsense reasoning in LLMs is promising, with ongoing research focused on enhancing the depth and breadth of knowledge these models can understand and utilize:

- Personalized Education Systems: Future LLMs could use commonsense reasoning to understand each student's individual learning style and needs, and tailor instruction accordingly.

- Enhanced Human-Computer Interaction: LLMs could hold more natural and efficient conversations by consistently interpreting human intentions and feelings across many contexts.

Conclusion: The Future of Commonsense Reasoning in LLMs

Commonsense reasoning is the basis for the next generation of LLMs to become truly intelligent systems that interact with the world in a meaningful and natural way. As this area progresses, richer commonsense knowledge, combined with attention to AI ethics and bias mitigation, will be essential for building increasingly capable and trustworthy LLMs that are in tune with human values.

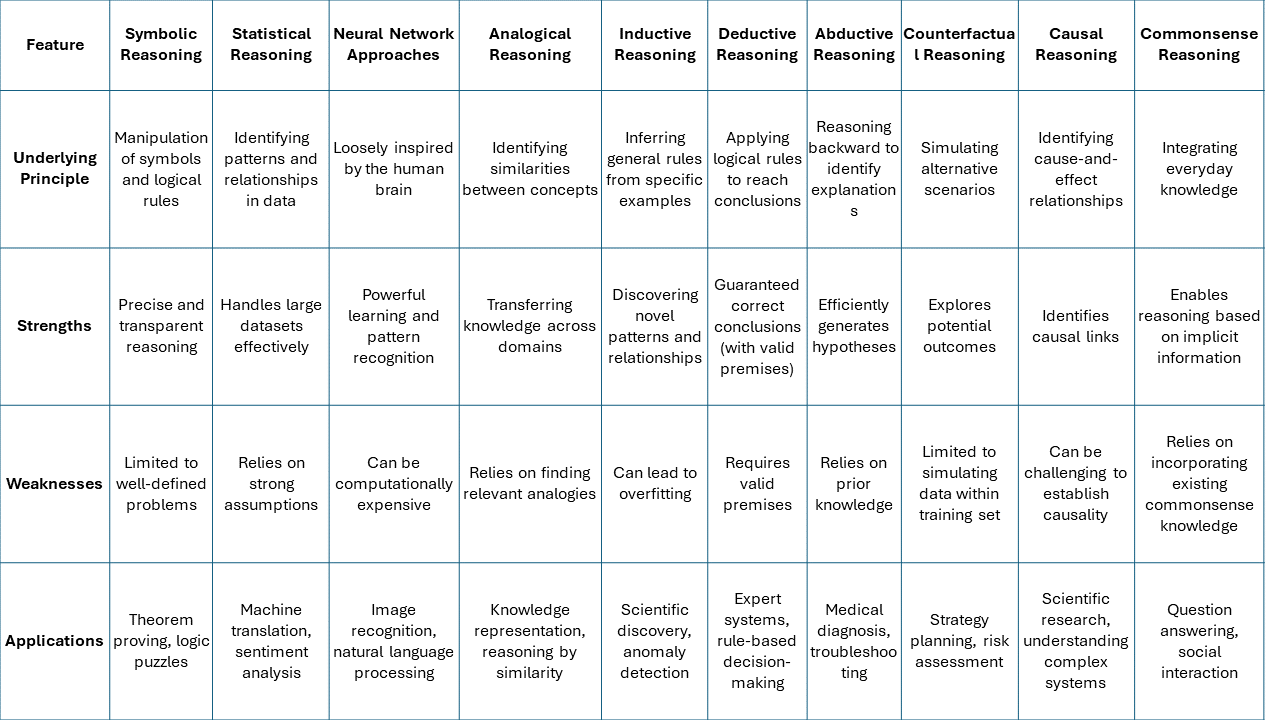

Comparison of LLM Reasoning Approaches

Final thoughts on Reasoning approaches in LLMs

In a nutshell, by understanding the variety of reasoning strategies used by Large Language Models, we can improve how we work with these systems and guide their evolution. Studying the details of causal, commonsense, and other forms of reasoning gives us the knowledge needed to fine-tune and direct LLMs. This knowledge is also vital for making good use of current capabilities and for developing future AI systems that better understand their environment and exhibit more complex cognitive behavior. For AI enthusiasts and scientists, this is a compelling call to action: dive deeper into the mechanics of LLM reasoning, both to use and to develop this exceptional technology. With every update and new LLM deployment, informed interaction becomes more of a catalyst for progress. By turning AI from an ordinary automated assistant into an insightful collaborator, we can make it indispensable for tackling complex global problems.