Google Gemini AI: Everything You Need to Know

The wait is over! Explore the capabilities and features of Google Gemini AI, the latest AI model, including its multimodal design, performance enhancements, pros, and cons. Learn about the different versions and how they can be used for tasks such as translation, coding, and reasoning.

Google has launched Gemini, a highly advanced AI model. The company claims Gemini is the most powerful model it has created yet. Users worldwide can access it via Bard, Google Pixel 8 Pro phones, and some developer platforms. It comes in three variants (Ultra, Pro, and Nano) and competes with ChatGPT, its biggest rival in the world of GenAI.

Are you interested in learning about Google Gemini and why there’s so much hype about it? Stay with us to learn everything about it.

What is Google Gemini?

Google Gemini is a family of large language models that can comprehend information in video, audio, text, and code. Google DeepMind, the Alphabet unit that focuses on AI research and development, announced Gemini 1.0 on 6th December 2023. Credit for developing Gemini's large language models goes to Sergey Brin, Google's co-founder, and other dedicated staff.

Gemini superseded Google's Pathways Language Model 2 (PaLM 2), released on 10th May 2023, to become the most advanced set of LLMs at Google. The company has integrated it into many of its generative AI technologies to enhance their performance. If you want to see how Gemini performs, Google Bard (which previously ran on PaLM 2) is the best place to try its capabilities.

Gemini integrates NLP (natural language processing) capabilities that help it understand and process prompts and data. It can also understand images, parsing complex visuals like figures and charts. This removes the need for external OCR (optical character recognition).

It can also handle translation tasks thanks to its multilingual capabilities. For instance, it can summarize information in various languages and perform mathematical reasoning. Plus, for a given picture, it can generate several captions in different languages.

Gemini has partial multimodality, meaning it was trained on different data types, including audio, visuals (images and videos), and text. This training makes Gemini a cross-modal reasoning platform. It can understand diagrams, graphs, handwritten notes, and even challenging questions.

What are the Different Versions of Gemini AI?

Google Gemini AI comes in three sizes: Gemini Nano (a lighter version), Gemini Pro (a beefier version), and Gemini Ultra (the most capable version). Here is a short description of each:

Gemini Nano

It can run on smartphones like the Google Pixel 8 Pro to suggest replies within chats and summarize text. It specializes in on-device tasks, which removes the need for external servers.

Gemini Pro

It runs in Google's data centers and powers the company's latest chatbot, Bard. It helps Bard understand queries and respond faster.

Gemini Ultra

It is still under testing and will be released once the testing period is over. This model is designed to handle the most complex and challenging tasks. According to Google, Gemini Ultra is the first model to outperform human experts on the massive multitask language understanding (MMLU) benchmark. MMLU covers 57 subjects, including physics, law, maths, history, ethics, and medicine, to test reasoning and the understanding of nuance in complex subjects.
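To make the benchmark idea concrete, here is a minimal sketch of how a multiple-choice benchmark like MMLU can be scored. The function name `score_multiple_choice`, the record layout, and the sample answers are hypothetical and chosen for illustration; this is not Google's evaluation code, and the exact averaging scheme used for published scores may differ.

```python
from collections import defaultdict

def score_multiple_choice(records):
    """Score (subject, predicted_choice, correct_choice) records.

    Returns per-subject accuracy and overall accuracy across all questions.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, predicted, answer in records:
        total[subject] += 1
        if predicted == answer:
            correct[subject] += 1
    per_subject = {s: correct[s] / total[s] for s in total}
    overall = sum(correct.values()) / sum(total.values())
    return per_subject, overall

# Made-up answers for illustration only; these are not real benchmark results.
records = [
    ("physics", "A", "A"),
    ("physics", "C", "B"),
    ("law", "D", "D"),
    ("law", "B", "B"),
]
per_subject, overall = score_multiple_choice(records)
```

A model's headline score is then a single accuracy figure, which is what gets compared against the accuracy of human experts on the same questions.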

What are the Features of Google Gemini?

What can Gemini do? Gemini is a versatile and powerful AI model with multiple features. Some are discussed below.

1. Partial Multimodality

Gemini's capabilities are not restricted to text. It is partially multimodal, as it can deal with several modalities, including:

Text

Read and understand text across various formats like coding, chat logs, articles, and books.

Audio

Identify and analyze speech in over 100 languages to understand the emotions and tone of the speaker. It can also transcribe audio messages. Unfortunately, the current Google Gemini interface does not let you upload files directly for transcription. Gemini can transcribe audio, but only through its API, not through a user-friendly upload option. This API access is typically aimed at developers and requires some coding knowledge.

However, there are alternative ways to achieve audio transcription using Gemini's capabilities:

- Cloud-Based Services: Several cloud-based services integrate with Gemini's API, allowing you to upload audio files and get them transcribed. These might involve additional costs depending on the platform.

- Pre-built Tools: There might be pre-built tools available online that utilize Gemini's API for transcription. These tools could have a user interface for uploading audio files, making it easier for non-developers. A quick web search for "Gemini audio transcription tool" might reveal some options.

- Third-Party Transcription Services: Many online services specialize in audio transcription. While they might not directly use Gemini, they offer user-friendly interfaces for uploading audio files and getting transcripts.

Video

Process and explore videos, generate descriptions, summarize key points, and even answer questions about videos. In the context of video, "Gemini" can refer to two things:

- Gemini for Education (Geminion): This platform allows uploading personal video clips for educational purposes. There is no video generation or manipulation involved. You can upload videos you filmed yourself or from your computer, with a maximum file size of 100 megabytes.

- Gemini Large Language Model: While Gemini can process information from videos through its API, it currently doesn't support directly uploading videos for regular users. However, research suggests it can analyze videos for tasks like summarizing content or understanding the visuals.

Images

Analyze and understand visual content like scenes, objects, images, and the relationships between them.

Code

Interpret, understand, and explain generated code in different programming languages including C++, Java, and Python.

2. Reasoning and Explanation

Gemini goes beyond just copying information. It understands complicated concepts, solves problems, and explains its reasoning in a straightforward and instructive way. This makes it especially helpful for the following tasks:

Dealing with Complex Questions

Gemini can evaluate information from several sources and deliver insightful solutions to complicated problems, including justifications for understanding.

Understanding Code

Gemini can examine and debug code, identifying flaws and explaining their significance. This may be quite useful for programmers and developers.

Understanding Scientific Concepts

Gemini simplifies complicated scientific concepts, making it an effective tool for teaching and research.

3. Advanced Information Retrieval

Contextual Understanding

Gemini excels in understanding context and finding appropriate content, even if the question is written differently from the keywords. This makes it perfect for difficult research projects or discovering precise solutions in vast databases.

Factual Reasoning

Gemini can evaluate information from several sources, spot disagreements between them, and provide the most accurate response. This helps prevent disinformation and offers users reliable information.

Tailored Search Results

Gemini offers personalized search results based on previous interactions and preferences, which makes information discovery more efficient and relevant. You don't necessarily have to stay in a single chat for Gemini to tailor your search results. Here's how it might work:

- Login-based personalization: Gemini might consider your login information for a more comprehensive history. This could include past searches, interactions with other Google products, and even browsing activity if you're signed in to your Google account.

- On-device history: Even without logging in, Gemini could track your search history within that specific chat session. This would personalize your results for that particular interaction.

The exact details of how Gemini personalizes search are still under development, but it likely uses a combination of login information and on-device history.

4. Capabilities: Creative and Expressive

Art and Music

Gemini may help create unique and visually appealing art and music based on textual cues. It opens up fascinating new avenues for artistic expression and collaboration between humans and AI. However, Gemini itself cannot directly generate mp3 files. It can work with mp3 files in two ways:

- Processing Audio Input: Gemini 1.5 Pro specifically can accept audio data, including mp3 format, as input for tasks like summarization or transcription. You would provide the mp3 file and Gemini would analyze it to complete your request.

- Prompting with Audio: When using the Gemini API, you can use an mp3 file along with text prompts to influence the content it generates. This wouldn't directly create an mp3, but the audio can provide context for the text output.
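As a rough sketch of what prompting with audio can look like for developers, the snippet below builds a request body that pairs a text prompt with base64-encoded mp3 data. The endpoint, model name, and field names (`contents`, `parts`, `inline_data`, `mime_type`) reflect the public Gemini REST API as I understand it and should be treated as assumptions to check against the current documentation; `build_audio_request` is a hypothetical helper, and nothing is sent over the network here.

```python
import base64
import json

# Assumed endpoint and model name; verify against the current Gemini API docs.
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/"
    "models/gemini-1.5-pro:generateContent"
)

def build_audio_request(prompt, audio_bytes, mime_type="audio/mp3"):
    """Return a JSON-serializable body pairing a text prompt with audio."""
    encoded = base64.b64encode(audio_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                ]
            }
        ]
    }

# Placeholder bytes stand in for a real file read with open(path, "rb").
body = build_audio_request("Summarize this recording.", b"fake-mp3-bytes")
payload = json.dumps(body)
```

In practice, the serialized `payload` would be sent as an HTTP POST to `ENDPOINT` together with an API key, and the model's reply would come back as text rather than as a new audio file.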

Multimodal Storytelling

Gemini can design compelling narratives that use text, graphics, music, and even video. It enables interactive and immersive narrative experiences tailored to different senses and learning methods.

Language Translation and Adaptation

Gemini can translate languages while maintaining the original text's subtleties and purpose. It can also adapt its linguistic style to suit the intended audience, which makes it appropriate for a variety of communication purposes.

5. Technical Expertise

Resource Efficiency

Gemini AI is designed to use computing resources efficiently, allowing it to be deployed on a broader range of devices and platforms. This widens its possibilities for integration with numerous applications and services, including Google Flights, Google Hotels, Google Maps, YouTube, Gmail, and Workspace.

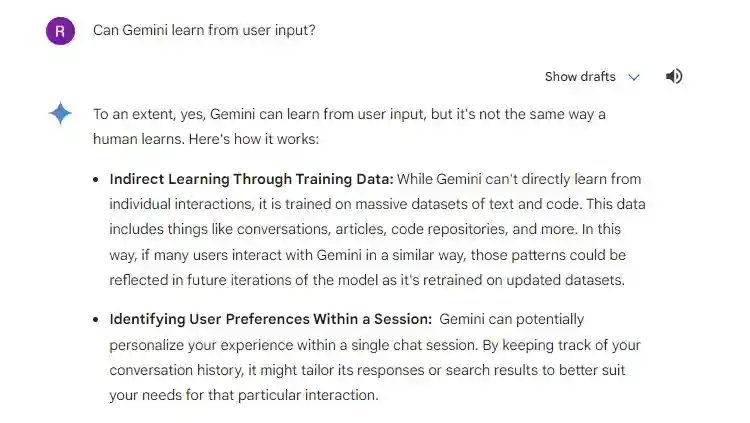

Continuous Learning and Adaptation

Gemini continually learns from fresh data and experiences, which improves its performance and capabilities over time. This helps it remain relevant and effective in an ever-changing digital context.

Explainable AI

Gemini can explain some of its thinking and decision-making processes, which is critical for establishing confidence in AI systems. This openness helps users better grasp how Gemini operates and how it generates its results. Its explanations are currently limited, however: it might highlight relevant keywords in your query or point to sources it used to inform its response, but it can't delve into the complex statistical calculations happening behind the scenes.

Neural networks, including the ones that power Gemini, are often referred to as "black boxes" because their decision-making process is opaque. They learn complex patterns from massive datasets, but they don't follow a set of logical rules like a traditional computer program.

6. Multimodal Generation

Gemini can create information from several modalities to produce creative outputs, such as:

- Write Tales or Poetry: It combines visual and text prompts to create distinctive and compelling stories.

- Produce Video Captions: Captions for videos are automatically generated to appropriately match the visual and aural information.

How to Access Gemini?

Gemini is now available in its Nano and Pro versions through supported Google products, including the Pixel 8 Pro phone and the Bard chatbot. Google has gradually integrated Gemini into its various services, including Ads, Chrome, and Search. Developers and enterprise customers have been able to access Gemini Pro via the Gemini API in Google Cloud Vertex AI and AI Studio since December 13, 2023.

How Does Google Gemini Work?

- Gemini is first trained on a substantial corpus of data.

- After training, the model uses an assortment of neural network techniques to comprehend data, answer queries, construct text, and generate outputs.

- The Gemini LLMs use a neural network architecture built on transformer models. The architecture has been enhanced to support long contextual sequences across data formats such as text, audio, and video.

- Google DeepMind used efficient attention mechanisms in the transformer decoder to help the model process long contexts that span multiple modalities. The training process was further improved with advanced data filtering techniques.

- An additional approach, known as targeted fine-tuning, adapts a model to a specific use case. Different Gemini models are fine-tuned in this way for different Google services.
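To illustrate the attention step mentioned in the list above, here is a minimal sketch of scaled dot-product attention with a causal mask, the textbook mechanism at the heart of a transformer decoder. It is a generic illustration in plain Python, not Gemini's actual implementation, whose efficient attention variants are not described in this article; the function names and the toy vectors are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal (decoder) mask.

    Each argument is a list of equal-length vectors, one per token.
    Token i may only attend to tokens 0..i.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: only score positions up to and including i.
        scores = [
            sum(qx * kx for qx, kx in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors used as queries, keys, and values alike.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
```

Because every token is compared with every earlier token, the cost of this naive version grows quadratically with sequence length, which is why long multimodal contexts call for more efficient attention variants.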

Gemini benefits from Google's latest TPU v5 processors. These are custom AI accelerators built to train and deploy large models efficiently.

A significant concern with LLMs is their potential to produce biased or harmful content. To make its models safer, Google says that Gemini has undergone extensive safety testing and mitigation for risks such as toxicity and bias. To further verify Gemini's capabilities, the models were evaluated on academic benchmarks in the domains of language, image, audio, video, and coding.

Significance of “Training on Data” for Extraordinary Capabilities

Currently, the majority of the data used to train the models of Google, OpenAI, Meta, and other companies comes from digitized information on the internet. However, efforts are being made to significantly broaden the range of data that AI can learn from. For instance, always-on cameras, microphones, and additional sensors could let AI know what is going on in the real world.

Pros and Cons of Gemini AI

Just like ChatGPT, Gemini AI has its own set of pros and cons:

Pros

- Better Reasoning: Gemini's stronger reasoning capabilities are meant to produce more nuanced replies and reduce the "hallucination" problems that other AIs encounter.

- Multiple Capabilities: The ability to process text, visuals, code, and more expands its range of applications across different industries.

- Resource-Friendliness and Efficiency: In comparison to ChatGPT, its reduced computational demand renders it a more resource-efficient alternative.

Cons

- Early Days: Gemini is still in its early stages and requires time to mature and establish its reputation among users.

- Can't Accept Documents: It doesn't accept files directly.

- Unable to Recognize Face Patterns: It's also unable to recognize faces.

- Potential for Misuse: As with any other powerful AI tool, there are legitimate concerns regarding its potential for misuse.

Although there are some drawbacks, the outlook for Gemini appears to be quite positive. As its capabilities continue to evolve, it has the potential to revolutionize the possibilities of AI.

Google's Ultimate Goal for Gemini

In conversation, Pichai and Hassabis make it evident that they view the Gemini launch as both a significant leap forward and the start of a bigger endeavor. Gemini is an impressive model that represents the culmination of years of hard work and innovation, though it perhaps should have arrived earlier to keep pace with the advancements made by OpenAI.

What are the potential end products and services that will be enabled by Gemini? If Gemini replaces PaLM 2, it will be capable of powering a wide range of Google services and products, including Maps, Docs, Translate, Google Workspace, cloud services, software, hardware, and new offerings. Google is dedicated to creating an advanced AI that can comprehend and engage with the world in innovative and extraordinary ways.