Is Artificial Intelligence a Threat to Privacy?

We are living in an era experiencing an information Big Bang, in which quintillions of bytes of data are created every day and the total amount of data roughly doubles every two years. Today, we have remarkable access to virtually any information we need; however, this access comes at a cost in the form of our private data, i.e., data in return for data. A simple internet search seems harmless, but before you even get the information you wanted, you are bombarded with ads offering exactly what you were searching for. What makes these ads appear, and how does it work?

Generally speaking, whenever you use technology like AI, you are often unknowingly or unwillingly revealing private data such as your age, location, and preferences. Tracking companies collect this data, analyze it, and use it to customize your online experience. They can also sell your private information to other entities without your knowledge or consent.

In this blog, we discuss how AI gains access to our private data, putting our privacy at stake in this so-called modern era, and to what extent users and customers are concerned about their privacy.

AI has a Dichotomous Nature

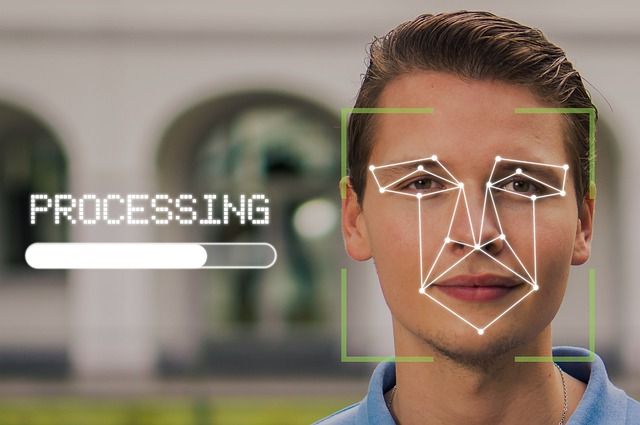

AI is revolutionizing businesses by solving numerous challenges. Businesses need to handle large volumes of data to enable more powerful, granular, faster, and more accurate analysis. AI applications such as facial recognition offer numerous benefits in many areas of human life. With digital photographs widely available through websites, social media, surveillance cameras, etc., facial recognition has progressed from vaguely identifying cats in images to clearly recognizing individual humans.

However, these AI advancements have a flip side. The enormous amounts of data that companies feed into AI-driven algorithms are also susceptible to data breaches. AI may also generate personal data about individuals without their permission. Facial recognition, in particular, is invading our privacy. That's why there are growing calls in many countries, including China, to restrict its use, and California, Oregon, and New Hampshire have passed laws banning facial recognition on police body cameras.

Hence, AI is raising privacy concerns for consumers and users. AI is undeniably a great blessing, but it brings with it a genuine risk: the violation of human rights, especially our right to privacy.

Privacy Violation – a concern for consumers/users

Customers have always cared about their private information. Today, however, organizations use big data analytics to take actions that may breach their customers' privacy. Such a breach can even embarrass consumers in front of their loved ones.

About a decade ago, Target developed a predictive algorithm to determine whether its female customers were pregnant based on their buying patterns, and then mailed them baby-related coupons at home. This kind of prediction proved problematic: in one well-known case, a teenage customer had not yet told her father about her pregnancy, and the coupons mailed to their home disclosed it.

The digitization of health information has also created growing challenges for the healthcare industry, which must safeguard vast amounts of confidential and sensitive information. Patients' protected health information (PHI) has become extremely valuable, and patients do not want their health conditions disclosed without their permission. In 2015, the Royal Free London NHS Foundation Trust provided data on 1.6 million patients to Alphabet's DeepMind without asking the patients for permission to share their private data. Because of similar privacy concerns, Google canceled a plan to publicly release a dataset of chest X-ray scans after concerns that the images carried personally identifiable information. Microsoft removed MS Celeb, a dataset of more than 10 million images of 100,000 celebrities, after concerns that the people in it were not even aware they had been included.

A 2017 Genpact survey of more than 5,000 people in several countries found that 63% of respondents value privacy over customer experience and want companies to avoid using AI if it invades their privacy, no matter how pleasing the resulting experience. About 71% of respondents worried that AI would make important decisions about them without their consent or knowledge.

A serious concern, then, is what kinds of personal information an AI system can access, how it accesses them, and how dangerous the resulting privacy violations could be.

How Does AI Gather Our Private Data?

Modern technologies such as surveillance cameras, smartphones, and the internet have made collecting our private data easier than ever. In this digital era, it is easy to track users' interests and activities, from their conversations at home and their product searches to their restaurant visits. Besides this unconscious disclosure of personal data, there is personal data that we upload to social media ourselves. For instance, we visit a restaurant or a store, photograph the food or products we like, and post the images online. Most of this data ends up on cloud servers, which makes this personal information considerably easier to track.

Let's look at the ways AI intrudes on our privacy by collecting our private data:

Persistent Surveillance

Persistent surveillance refers to the continuous observation of an individual, a location, or a thing. It is a technique widely used by the military and police to stay informed about an enemy or suspect. Today, AI brings the same kind of persistent surveillance into our lives through the following technologies:

Digital Assistants

Persistent surveillance has become a product feature of so-called AI assistants such as Google Home and Amazon's Alexa. These AI-driven systems collect audio from the user's home or wherever else they are placed, so what users say at home can be accessible to the parent company. The systems stream this data to the parent company, which stores and analyzes it. As a result, the company can observe what its users are talking about and what they are doing or about to do, without their knowledge.

A 2018 study by Shields found that consumers like the home-security features of digital assistants but also worry about the privacy violations these devices can cause.

CitiWatch

Apart from the digital assistants that people willingly place in their homes, AI has also made persistent surveillance possible from a distance, for example through drone surveillance. Technology first used on the battlefield has recently been applied domestically. In 2005, Baltimore developed CitiWatch, a ground-level surveillance system with more than 700 cameras to observe people around the city. Law enforcement officials in Baltimore claim that these programs are reducing crime. Privacy advocates, by contrast, argue that such programs violate privacy and restrict personal freedom.

Privacy Violation Contracts

Most people don't realize what terms and conditions the contracts of online services contain. They accept them without reading, because the contracts are long and hard to read. The consequences, however, can be serious. Facebook's and Google's terms, for example, grant them a broad license to use every message, image, or video you upload, and they monetize your personal data by letting other companies target you with ads.

Data de-anonymisation

AI can identify, and therefore track, individuals across various devices, whether they are at home, at work, or anywhere else. For instance, personal data that has been anonymized can often be de-anonymized with the help of AI by linking it to other data sources. Individuals can also be identified and tracked through AI-driven facial recognition.
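To make the linking idea concrete, here is a minimal sketch of a classic linkage attack. It is a simple exact-match join rather than an ML model; AI-based approaches extend the same idea to fuzzier matches. An "anonymized" dataset that still contains ZIP code, birth date, and sex is joined against a public dataset that includes names. All records, names, and field names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical "anonymized" medical records: names removed, quasi-identifiers kept.
anonymized_records: List[Dict[str, str]] = [
    {"zip": "02138", "birth_date": "1945-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1982-01-15", "sex": "M", "diagnosis": "asthma"},
]

# Hypothetical public dataset (for example, a voter roll) that includes names.
public_records: List[Dict[str, str]] = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1945-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_date": "1982-01-15", "sex": "M"},
    {"name": "Carol Example", "zip": "02139", "birth_date": "1990-03-02", "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def qi_key(record: Dict[str, str]) -> Tuple[str, ...]:
    """The combination of quasi-identifier values used to join the datasets."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(anonymized: List[Dict[str, str]], public: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Re-identify anonymized rows whose quasi-identifiers match exactly one public row."""
    index: Dict[Tuple[str, ...], List[str]] = {}
    for row in public:
        index.setdefault(qi_key(row), []).append(row["name"])
    matches = []
    for row in anonymized:
        names = index.get(qi_key(row), [])
        if len(names) == 1:  # a unique match means the record is re-identified
            matches.append((names[0], row["diagnosis"]))
    return matches


print(link(anonymized_records, public_records))
```

Removing names was not enough here: the remaining fields were unique in both datasets, so the join attaches a name to each diagnosis.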

Inappropriate data masking

If data masking is not done properly, it can still reveal the individual whose data has been masked. Organizations need effective procedures and policies for data masking to ensure that consumer privacy is preserved. IBM, for example, provides resources and guidance on appropriate data masking techniques.
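Masking done poorly can look like this: a five-digit ZIP code "masked" with a plain SHA-256 hash. Because there are only 100,000 possible values, an attacker can simply hash every candidate and compare. A keyed hash (HMAC) resists this particular attack, assuming the key is kept secret and stored apart from the data. This is a hypothetical sketch using Python's standard library, not IBM's guidance or tooling.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def naive_mask(zip_code: str) -> str:
    """Poor masking: an unsalted hash of a low-entropy field."""
    return hashlib.sha256(zip_code.encode()).hexdigest()


def keyed_mask(zip_code: str, key: bytes) -> str:
    """Better: a keyed hash (HMAC); without the key, guesses cannot be checked."""
    return hmac.new(key, zip_code.encode(), hashlib.sha256).hexdigest()


def brute_force(masked: str) -> Optional[str]:
    """An attacker hashes all 100,000 possible 5-digit ZIP codes and compares."""
    for candidate in range(100_000):
        guess = f"{candidate:05d}"
        if naive_mask(guess) == masked:
            return guess
    return None


secret_key = secrets.token_bytes(32)  # hypothetical key; keep it separate from the masked data
print(brute_force(naive_mask("02138")))              # the original ZIP code is recovered
print(brute_force(keyed_mask("02138", secret_key)))  # None: the attacker's guesses never match
```

Keyed hashing is pseudonymization rather than anonymization: whoever holds the key can still reverse it by the same brute-force method, and other fields in the record may still allow linkage.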

AI-based Predictions

ML algorithms can infer sensitive information from seemingly non-sensitive data. For example, based on a person's typing pattern on the keyboard, AI can predict their emotional state, such as anxiety, nervousness, or confidence.
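The raw signal such predictions start from is surprisingly simple. The sketch below computes common keystroke-dynamics features (dwell time, flight time, typing speed) from key press and release timestamps. It stops at feature extraction: mapping these features to an emotional state would require a classifier trained on labeled data, which is not shown. The event format, function name, and sample session are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Each event is (key, press_time_ms, release_time_ms). The format is an assumption.
Keystroke = Tuple[str, float, float]


def timing_features(events: List[Keystroke]) -> Dict[str, float]:
    """Extract simple keystroke-dynamics features from a session of at least two keystrokes."""
    # Dwell time: how long each key is held down.
    dwell = [release - press for _, press, release in events]
    # Flight time: gap between releasing one key and pressing the next.
    flight = [events[i + 1][1] - events[i][2] for i in range(len(events) - 1)]
    duration_s = (events[-1][2] - events[0][1]) / 1000
    return {
        "mean_dwell_ms": mean(dwell),
        "mean_flight_ms": mean(flight),
        "keys_per_second": len(events) / duration_s,
    }


# Hypothetical typing session for the word "hey".
session = [("h", 0, 90), ("e", 150, 230), ("y", 310, 400)]
print(timing_features(session))
```

None of these numbers reveals what was typed, which is exactly why such data can look non-sensitive while still feeding an inference model.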

Google, of course, has been very successful at gathering private data. The main reason is that when people search the internet for information, they usually cannot hide their interests: even someone who wants to keep a sensitive issue private cannot search for it without typing the terms into the search bar. As a result, our most intimate interests, which everyone wants to keep private, are no longer private; they are collected online. Political views, sexual orientation, ethnic identity, and overall health can also be predicted from activity logs and other metrics.

How Does AI Manipulate Our Private Data for Unintended Purposes?

No doubt, users' private data helps AI systems perform their tasks better, but collecting it carries risks. One of these risks is the use of private data for unintended purposes: users often don't know how their data will be processed, where it will be used, or whether it will be sold. In general, this data is used in programmatic advertising to persuade people to buy products. However, it can also be exploited by AI systems and bots to manipulate people. In 2018, the Cambridge Analytica scandal revealed how personal data collected through Facebook could be used to try to manipulate elections.

AI Vulnerability to Cyber-attacks

We have discussed how AI gains insight from largely unrestricted access to our private data and uses it for unintended purposes. Beyond data collection, the AI algorithms an organization uses are also highly vulnerable to cyberattacks, which threatens the security of users' data. AI increases the opportunities for cybercriminals to access our sensitive private information and exploit it for their own interests.

Speech Imitation and Deepfakes

AI can impersonate people, and this poses a serious threat. In 2016, Adobe presented a system that could replicate a person's voice after listening to about twenty minutes of their speech. AI does not stop at speech imitation; its privacy violations extend to so-called deepfakes, in which artificial neural networks are used to manipulate videos.

These videos are typically manipulated by swapping faces and are then shared online. The practice has become common among vindictive ex-partners, who can replace a performer's face in a pornographic video with that of their former partner as an act of revenge.

How Can Privacy Violations Be Avoided?

The renowned physicist Stephen Hawking stated, "Unless we learn how to prepare for, and avoid the potential risks, AI could be the worst event in the history of our civilization."

It is fair to say that in a world saturated with AI advancements and cyberattacks, privacy violation is a major challenge for businesses, especially customer-oriented ones, because consumers don't want a delightful experience at the cost of their privacy. The growth of AI complicates matters further, as existing privacy practices might not account for AI's capabilities. For instance, according to an article published in the AMA Journal of Ethics, existing practices for de-identifying consumer data are ineffective because ML algorithms can re-identify records from as few as three data points. The question, then, is how privacy violations caused by AI can be reduced or avoided so that users and consumers can trust both organizations and the technology itself.
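One way to see why three data points can be enough is to count how many records are unique on a combination of three quasi-identifiers; a unique record is a candidate for re-identification. The sketch below computes that fraction along with the dataset's k-anonymity (the size of the smallest group of records that share the same values). The dataset and field names are hypothetical, and this is a simple measurement, not the ML method the cited article refers to.

```python
from collections import Counter
from typing import Dict, List, Tuple


def _combo(record: Dict[str, str], fields: List[str]) -> Tuple[str, ...]:
    return tuple(record[f] for f in fields)


def fraction_unique(records: List[Dict[str, str]], fields: List[str]) -> float:
    """Share of records whose combination of `fields` appears exactly once."""
    counts = Counter(_combo(r, fields) for r in records)
    unique = sum(1 for r in records if counts[_combo(r, fields)] == 1)
    return unique / len(records)


def k_anonymity(records: List[Dict[str, str]], fields: List[str]) -> int:
    """Smallest number of records sharing the same quasi-identifier values."""
    counts = Counter(_combo(r, fields) for r in records)
    return min(counts.values())


# Hypothetical de-identified dataset with three quasi-identifiers.
data = [
    {"zip": "02138", "birth_year": "1945", "sex": "F"},
    {"zip": "02138", "birth_year": "1945", "sex": "F"},
    {"zip": "02139", "birth_year": "1982", "sex": "M"},
    {"zip": "02139", "birth_year": "1990", "sex": "F"},
]
qi = ["zip", "birth_year", "sex"]
print(fraction_unique(data, qi))  # 0.5
print(k_anonymity(data, qi))      # 1
```

A k-anonymity of 1 means at least one person stands alone on these three attributes, so anyone who knows those three facts about them can single out their record.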

Governments and businesses in many countries are actively looking for ways to reduce consumers' privacy risks. Let's discuss a few of them:

Privacy Regulations and Compliance Management

As businesses become more intrusive with the rise of technology, governments in several countries are leading the charge to pass regulations against violations of consumer privacy. Laws to prevent privacy violations need to be implemented at the local, state, and federal levels. Their objective is to make privacy an obligatory part of compliance management. In the US, several bills addressing privacy and data breaches have been passed, and many more are pending, in various states and territories. Smart businesses now oversee their use of data to ensure it complies with privacy regulations.

CCPA by California

One of the most comprehensive privacy laws in the US, the California Consumer Privacy Act (CCPA), was signed in 2018 and took effect in 2020. It sets standards for how companies handle personal data and gives consumers the right to know what data companies collect about them, to have it deleted, and to opt out of its sale to third parties.

GDPR by Europe

Europe is highly attentive to consumers' privacy demands and is setting the bar for preventing privacy violations. The EU's General Data Protection Regulation (GDPR), which took effect in 2018, introduced new standards for an individual's rights over their personal information and raised digital privacy expectations to a new level. GDPR aims to give consumers greater control over how their personal data is collected and used, and violations can be costly: organizations can be fined up to €20 million or 4% of their annual global turnover, whichever is higher. That's why the EU's privacy rules are taken seriously. GDPR focuses on ensuring that companies' practices and policies are aligned with its privacy standards. Any business that uses the personal data of people in the EU to offer services, sell products, or monitor their behavior has to comply with these regulations.
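The "whichever is higher" rule is simple arithmetic. The sketch below computes the maximum upper-tier fine for a given annual worldwide turnover, using integer euros to avoid rounding noise. It ignores GDPR's lower tier (€10 million or 2%) and the factors regulators weigh when setting an actual fine, which is usually well below the cap; the function name is hypothetical.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: int) -> int:
    """Upper-tier GDPR cap: EUR 20 million or 4% of worldwide annual turnover, whichever is higher."""
    return max(20_000_000, annual_global_turnover_eur * 4 // 100)


print(gdpr_max_fine_eur(100_000_000))    # 20000000 (4% would be only 4 million)
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000
```

The fixed €20 million floor is what makes the rule bite for smaller companies, while the 4% term scales the maximum with the size of large multinationals.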

HIPAA Act

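To protect patients' health information, the US passed the Health Insurance Portability and Accountability Act (HIPAA) in 1996. The act generally requires healthcare providers, insurers, and other covered entities to obtain a patient's authorization before disclosing their health information for purposes other than treatment, payment, or healthcare operations.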

However, all these regulations and acts are still in their infancy: they are not perfect and continue to evolve as privacy laws expand. Moreover, compliance and legal teams currently handle the complexities of data privacy regulations manually, a process that is both laborious and time-consuming. Lawyers also face a technology gap that limits their ability to define and manage privacy policies efficiently. A company named Cape Privacy offers advanced privacy techniques to enable secure data sharing and avoid privacy violations. It delivers software that integrates into a company's data science and ML infrastructure to make data privacy easier to achieve.

Corporate boards must make an effort

Board members of companies that use AI need to make sure that management protects customers' data by following the best possible practices. They should bring customers' privacy concerns and awareness of privacy violation risks into senior management discussions. Audit committee members can also play a role by emphasizing the prevention of security breaches.

Companies must limit themselves

Companies should avoid stockpiling every bit of available data and limit themselves to storing only the data points they actually need. According to Bernier, huge volumes of personal data can cause huge problems in the event of a security breach. Companies should schedule routine reviews of the data they hold and set timetables for deleting or filtering information.
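A scheduled clean-up of this kind can be as simple as the sketch below, which drops records older than a retention period and strips fields the business does not need (data minimization). The 365-day period, the field names, and the `Record` type are hypothetical; real retention periods depend on legal and business requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# Hypothetical policy: keep records for 365 days, and keep only these fields.
RETENTION = timedelta(days=365)
NEEDED_FIELDS = {"customer_id", "last_order"}


@dataclass
class Record:
    collected_at: datetime
    fields: dict


def apply_retention(records: List[Record], now: datetime) -> List[Record]:
    """Return a minimized copy of the records that are still within the retention period."""
    kept = []
    for r in records:
        if now - r.collected_at > RETENTION:
            continue  # past the retention period: drop the whole record
        # Data minimization: discard fields the business does not need.
        minimized = {k: v for k, v in r.fields.items() if k in NEEDED_FIELDS}
        kept.append(Record(r.collected_at, minimized))
    return kept


now = datetime(2024, 1, 1)
records = [
    Record(datetime(2022, 6, 1), {"customer_id": 1, "last_order": "A12", "location": "Boston"}),
    Record(datetime(2023, 9, 1), {"customer_id": 2, "last_order": "B7", "location": "Denver"}),
]
print(apply_retention(records, now))
```

Run on a schedule, a job like this keeps the stockpile small, so a breach exposes less: here the older record disappears entirely and the location field is removed from the one that remains.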

Companies must adopt technical approaches

Generally speaking, AI technologies were not developed with privacy in mind. However, privacy-preserving ML, a subfield of ML, is among the first approaches to preventing privacy violations. Federated learning, homomorphic encryption, and differential privacy are among the most promising emerging techniques in this area.
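Of these techniques, federated learning is the easiest to sketch. Each client trains on its own data locally and shares only model parameters, which a server averages, weighted by how much data each client holds (the federated averaging, or FedAvg, scheme). The one-parameter linear model, learning rate, and client data below are hypothetical and chosen for readability; production systems add safeguards such as secure aggregation, because shared updates can still leak information.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave the client


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 20) -> float:
    """Gradient descent on mean squared error for the model y = w * x, run on-device."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients: List[Dataset], rounds: int = 10) -> float:
    w_global = 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Each client starts from the global weight and returns only its updated weight.
        local_weights = [local_update(w_global, d) for d in clients]
        # The server averages the weights, weighted by each client's number of samples.
        w_global = sum(w * len(d) for w, d in zip(local_weights, clients)) / total
    return w_global


# Hypothetical clients whose private data all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (4.0, 12.0)],
]
print(round(federated_averaging(clients), 3))
```

The server ends up with a weight close to 3 without ever seeing a single (x, y) pair; only the locally trained weights cross the network.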

These emerging techniques might reduce some of the privacy risks inherent in AI and ML, but they are also in their infancy and have several shortcomings. For instance, differential privacy can reduce accuracy because of the noise it injects. Homomorphic encryption, which performs calculations directly on encrypted data, is slow and computationally demanding. Nevertheless, researchers argue that although these techniques are slow, they are heading in the right direction.
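The accuracy cost of differential privacy comes directly from the injected noise. The sketch below applies the Laplace mechanism to a counting query: a smaller privacy parameter epsilon (stronger privacy) means larger noise and therefore larger error. The query, the epsilon values, and the way Laplace noise is sampled (as the difference of two exponential draws) are illustrative choices; a real system should use a vetted differential privacy library rather than hand-rolled floating-point noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1): noise scale = 1 / epsilon."""
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # fixed seed so the run is reproducible
true_count = 1_000
for epsilon in (0.1, 1.0, 10.0):
    answers = [private_count(true_count, epsilon, rng) for _ in range(10_000)]
    mean_abs_error = sum(abs(a - true_count) for a in answers) / len(answers)
    print(f"epsilon={epsilon}: mean absolute error ~ {mean_abs_error:.2f}")
```

For Laplace noise the expected absolute error equals the scale, 1/epsilon, so the printed errors should come out near 10, 1, and 0.1: the privacy-accuracy trade-off in three numbers.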

Introducing additional features in AI devices

With privacy concerns in mind, Amazon has added several features to its devices to restrict their data collection. Amazon claims that because its Alexa devices only start recording after hearing the wake word "Alexa," they do not perform persistent surveillance. However, CIA documents released by WikiLeaks in 2017 revealed that such safeguards can be circumvented, turning connected devices back into tools for continuous surveillance.
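The claimed safeguard amounts to gating: audio is examined on the device, and only what follows the wake word is sent to the cloud. The sketch below models that idea with text frames in place of audio. `detect_wake_word` is a hypothetical stand-in for an on-device keyword-spotting model, and the whole sketch is an illustration of the concept, not Amazon's implementation.

```python
from typing import Iterable, List

WAKE_WORD = "alexa"


def detect_wake_word(frame: str) -> bool:
    """Hypothetical stand-in for an on-device keyword-spotting model."""
    return WAKE_WORD in frame.lower()


def frames_to_upload(stream: Iterable[str], request_frames: int = 2) -> List[str]:
    """Return only the frames that would leave the device."""
    uploaded: List[str] = []
    remaining = 0  # frames still to send for the current request
    for frame in stream:
        if remaining > 0:
            uploaded.append(frame)  # part of the request that follows the wake word
            remaining -= 1
        elif detect_wake_word(frame):
            uploaded.append(frame)
            remaining = request_frames
        # Any other frame is examined locally and then discarded.
    return uploaded


stream = ["talking about dinner", "private chat", "Alexa", "what's the weather", "thanks", "more private chat"]
print(frames_to_upload(stream))
```

The sketch also shows why the safeguard depends on trusting the device's software: the only thing keeping the private frames at home is this gating logic, and if it is modified, as the leaked documents describe, nothing else stops the upload.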

Protecting our Privacy at the individual level

Although governments and organizations are working hard to prevent privacy violations, there is still a long way to go before we can be confident that our private data is secure. At the individual level, we should know the measures that can protect our privacy to some extent. Here are a few steps to minimize the AI-exploitable online footprint that could create embarrassing situations for us:

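- Try to browse through anonymity networks such as Freenet, I2P, or Tor. These networks encrypt your traffic inside the network and route it through multiple relays, which hides your identity and location from the sites you visit (on Tor, traffic leaving the network is encrypted only if the site uses HTTPS).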

- Choose an open-source web browser such as Firefox. It is preferable to Chrome because its code can be freely inspected for security vulnerabilities.

- Use an open-source operating system (OS), such as a Linux distribution. Microsoft's and Apple's operating systems are closed-source and collect telemetry data that users cannot fully inspect or control.

- Prefer Android phones: their core operating system is open-source, which makes it easier to inspect for privacy problems than Apple's iOS. However, most Android phones also ship with proprietary apps and services, so some privacy risks remain.

Wrapping Up

AI has made considerable contributions to every aspect of our lives. The vast amounts of information we gather and analyze today with AI tools help us tackle numerous societal ills that were hard to resolve before. Unfortunately, like many other technologies, AI brings its fair share of drawbacks and can harm us in several ways. Privacy violation is just one of many examples of how AI can make our lives harder. Put simply, when AI is used maliciously, it does more harm than good. It is therefore essential to implement it with the utmost caution, keeping users' privacy concerns in mind. By adopting measures to defend our privacy, we can safeguard it from malicious use.