AI Evolution: The Historic Timeline of AI Milestones

Most folks think Artificial Intelligence (AI) is a novel notion, but it has been around for a long time. We went back through its history and curated a list of the key artificial intelligence breakthroughs that have shaped the way we live today.

History of Artificial Intelligence

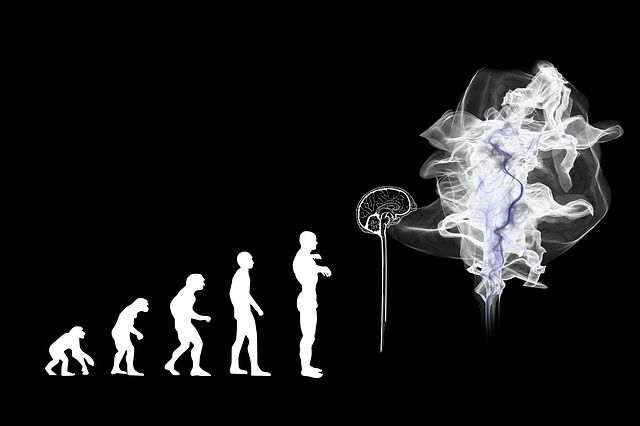

Artificially intelligent robots were first popularized by science fiction in the first half of the twentieth century. By the early 1950s, scientists, mathematicians, and philosophers had informally adopted the notion of Artificial Intelligence (AI). Computers were so expensive in the mid-twentieth century that only prominent institutions and large technology firms could afford them. Logic Theorist, written by Allen Newell, Cliff Shaw, and Herbert Simon, was a program developed at the Research and Development (RAND) Corporation to replicate human problem-solving abilities. The program was presented at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI), organized by John McCarthy and Marvin Minsky in 1956, and it is widely regarded as the first artificial intelligence program.

AI grew in popularity from 1957 to 1974. Computers could store more data and became faster, cheaper, and more accessible. Machine learning algorithms improved as well, and researchers became better at determining which method to use for a given problem. Moving past the early excitement, however, revealed a host of obstacles. The main problem was a lack of computing capacity to do anything useful: computers couldn't store enough data or process it quickly enough.

Two factors rekindled AI in the 1980s: an expansion of the algorithmic toolkit and an increase in funding. John Hopfield and David Rumelhart popularized “deep learning” methods, which enabled machines to learn from experience. Edward Feigenbaum, meanwhile, pioneered expert systems that mirrored the decision-making process of a human expert.

AI flourished. Many of artificial intelligence's key goals were accomplished throughout the 1990s and 2000s. In 1997, Garry Kasparov, the reigning world chess champion and a grandmaster, was beaten by IBM's Deep Blue, a chess-playing computer program.

We currently live in the "big data" era, in which we can collect amounts of data far too large for any single person to analyze. Artificial intelligence has already proven beneficial in a variety of areas, including technology, banking, healthcare, and entertainment.

Milestones of Artificial Intelligence

There have been numerous key turning points in the history of artificial intelligence that have advanced its capabilities. From smart personal assistants to household robots, technology that was once considered science fiction is now a reality.

The technology we have today is the result of a series of artificial intelligence milestones accomplished by many unsung heroes working on many different projects. We have gathered those milestones into a single timeline.

Click here to see the timeline of AI Milestones