Boost Debt Collection and Recovery using Machine Learning [part 3/5]

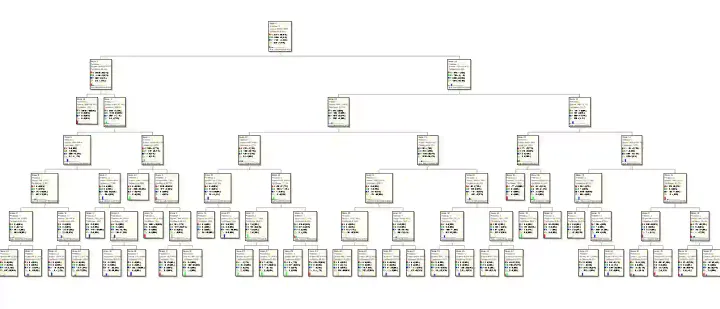

This is part 3 of the business use case on boosting debt collection and recovery using machine learning. It covers the implementation of a predictive model that enhances the current recovery system by creating focus groups the business can use to boost debt collection.

![Boost Debt Collection and Recovery using Machine Learning [part 3/5]](/content/images/size/w1200/2024/05/Boost-Debt-Recovery.webp)