CPU vs GPU in Machine Learning Algorithms: Which is Better?

Machine learning algorithms are developed and deployed using both CPUs and GPUs. Each has its own distinct properties, and neither can be favored over the other in every case. However, it's critical to understand which one to use based on your needs, such as speed, cost, and power usage.

A little about Machine Learning

Machine Learning is the science of making computers intelligent enough to make their own decisions. It has been around for a long time, but in recent years it has become powerful enough to be used on problems with many variables and large datasets.

In fact, Machine Learning algorithms are found everywhere today - from Google Search to autonomous cars! We use them automatically every day not only because they work well, but also because we don't have to worry about programming all these tasks by hand and updating our code when something changes. This frees us up so that we can focus on more creative design decisions instead of managing technical details like data storage or algorithm design.

The goal of this blog post is not just to help you understand Machine Learning, but to help you decide which machine learning hardware is right for your needs.

Machine learning algorithms are found in just about every major technology today. They are used extensively across industries because they work well with big datasets and can extract meaningful insights from them without human input each time. That said, no single processor type or algorithm addresses everyone's needs equally. Some companies care more about speed than power consumption, while others are more concerned with cost efficiency. Which machine learning hardware best suits a given purpose depends on the computing power, storage space, and availability and cost per usage hour (or minute) that it requires.

1. Central Processing Unit (CPU) for Machine Learning

Is Central Processing Unit (CPU) still good for machine learning?

A common belief among tech enthusiasts is that CPUs are no longer a serious option for data-intensive computation, and that more advanced, specialized hardware is the way to go. But as the saying goes, “age before beauty”: CPUs are still in the race.

AI accelerators are indeed specialized for machine learning applications but CPUs always win the price race, being the cheapest. Not everyone learning or deploying machine learning algorithms can afford AI hardware accelerators. Certain machine learning algorithms prefer CPUs over GPUs.

Generality of CPU

CPUs are called general-purpose processors because they can run almost any type of calculation. That generality makes them less efficient, in terms of power and chip area, than specialized hardware for any one workload. A CPU works through a register-ALU-control cycle: it keeps values in registers, and the program directs the Arithmetic Logic Unit (ALU) to read specific registers, perform an operation, and write the result to an output register or memory. This sequential mode of operation is good for complex, branching calculations that run one after another, but not for large numbers of simple calculations that could run in parallel.

Machine Learning Operations preferred on CPUs

- Recommendation systems for training and inference that involve huge memory for embedding layers.

- Machine learning algorithms that do not require parallel computing, e.g. support vector machines and time-series models.

- Algorithms that use sequential data, for example, recurrent neural networks.

- Algorithms that involve intensive branching.

- Most data science algorithms deployed on cloud or Backend-as-a-Service (BaaS) architectures.

We cannot exclude the CPU from any machine learning setup, because the CPU is the gateway through which data travels from its source to the GPU cores. If the CPU is weak and the GPU is strong, the CPU can become the bottleneck. A stronger CPU can prepare and transfer data faster, which keeps the GPU busy and speeds up the overall computation.
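To make the bottleneck idea concrete, here is a minimal sketch in Python. It assumes a deliberately simplified two-stage pipeline in which the CPU prepares batches while the GPU processes them, with perfect overlap between the two stages. The function names and the batch rates are hypothetical, chosen only for illustration.

```python
def pipeline_throughput(cpu_batches_per_s: float, gpu_batches_per_s: float) -> float:
    """Batches per second of a two-stage pipeline with perfectly overlapped stages.

    Simplifying assumption: the slower stage alone sets the overall pace.
    """
    return min(cpu_batches_per_s, gpu_batches_per_s)


def gpu_utilization(cpu_batches_per_s: float, gpu_batches_per_s: float) -> float:
    """Fraction of the GPU's capacity that is actually used."""
    return pipeline_throughput(cpu_batches_per_s, gpu_batches_per_s) / gpu_batches_per_s


# Hypothetical rates: the CPU can prepare 40 batches/s, the GPU can consume 100.
print(pipeline_throughput(40.0, 100.0))  # limited by the CPU stage
print(gpu_utilization(40.0, 100.0))      # the GPU sits idle most of the time
```

In this toy model, upgrading the GPU changes nothing until the CPU side can keep up, which is why a balanced system matters.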

Basics of a computer processor

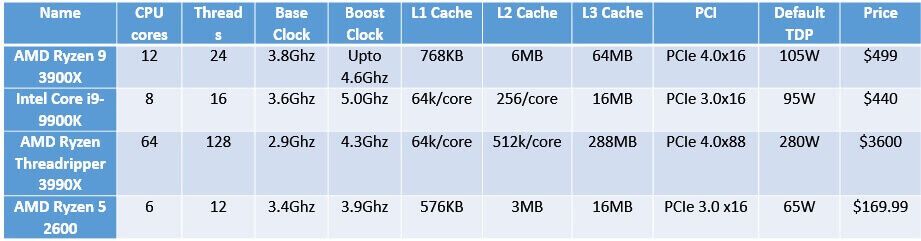

The main features to consider when choosing a computer processor are clock speed, cache, number of cores, power consumption, and TDP.

Clock Speed

Clock speed alone is not a reliable indicator of performance for today's computer processors. Most desktop CPUs ship with base clocks of roughly 3 to 4 GHz and can boost higher for short bursts; however, they routinely drop to much lower clocks at idle or under light load to limit power consumption and heat dissipation.

CPU Cores

Today’s processors generally contain two or more physical CPU cores in a single package. Mainstream multi-core x86 designs date back to 2005, when Intel introduced the dual-core Pentium D. Multi-core processors follow the Multiple Instruction, Multiple Data (MIMD) model: each core executes its own instruction stream on its own data, so multiple threads can run simultaneously and independently of each other, increasing overall throughput.

CPU cache

The cache is an important feature of a CPU. It is a small, fast on-chip memory where the processor keeps copies of frequently needed data and instructions from the main memory (RAM). This reduces latency and increases processing speed, which enhances performance.

A CPU cache is much smaller than RAM but faster, because it is built from fast memory cells and sits close to the ALU. Sizes typically range from tens of kilobytes for the innermost level to several megabytes or more for the outer levels, and access can be roughly 10 to 100 times faster than RAM.

L1 Cache

The first-level cache (L1) is the smallest and fastest cache. It is physically located inside each CPU core.

It always serves a single core. The core accesses it through a cache controller to fetch frequently used instructions and data and to keep them close at hand for later use.

L2 Cache

The second-level cache (L2) is larger and somewhat slower than L1. It holds copies of instructions and data recently accessed by the CPU that no longer fit in L1.

L3 Cache

Most computer processors, including those for laptops and mobile phones, use an L3 or third-level cache, which is larger than the second-level cache and is typically shared among all cores. It is slower than L2 but still much faster than RAM, so it improves performance by reducing the number of trips to main memory.

L4 Cache

A few processors also have a fourth-level cache (L4), which is even bigger than the L3. It is uncommon and is usually implemented as separate memory outside the CPU cores.

TDP (Thermal Design Power)

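TDP is the amount of heat, in watts, that a processor's cooling system is designed to dissipate under sustained load. It also serves as a rough guide to how much power the processor consumes when it’s at full load. Overclocking pushes power draw and heat above the rated figure, so if you want to overclock, make sure your cooler and power supply have enough headroom.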

CPU Bandwidth

Bandwidth is the throughput of a data path, that is, the amount of data it can move per unit of time. It may be measured in bits per second (bps), as is common for serial communications, or in bytes per second (Bps), as is common for memory and buses.

For example, a link that moves 10MB of data every second has a bandwidth of 10MB/s, and two such links working in parallel provide a combined bandwidth of 20MB/s.
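As a rough illustration of where a memory bandwidth figure comes from, peak theoretical bandwidth can be estimated as transfers per second × bytes per transfer × number of channels. The sketch below assumes a dual-channel DDR4-3200 configuration (3200 million transfers per second over a 64-bit channel); the function name is hypothetical, and real-world throughput is lower than this theoretical peak.

```python
def peak_memory_bandwidth_gbs(transfers_per_s: float, bus_width_bits: int, channels: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8  # 8 bits per byte
    return transfers_per_s * bytes_per_transfer * channels / 1e9


# Assumed example configuration: DDR4-3200, 64-bit channels, dual channel.
ddr4_dual = peak_memory_bandwidth_gbs(3200e6, 64, 2)
print(f"{ddr4_dual:.1f} GB/s")  # prints "51.2 GB/s"
```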

PCI Express lane

The number of PCI Express lanes a processor provides determines how much bandwidth is available to the devices attached to it.

PCI Express (PCIe) is a high-speed bus that uses links of various widths (x1, x2, x4, x8, or x16 lanes) to connect peripherals such as graphics cards, storage drives, and network interface cards. It’s the standard expansion bus in today's personal computers because of its high bandwidth and scalability.

**Be sure to choose PCIe over SATA for faster data transfer.**
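To put numbers on the PCIe versus SATA comparison, the sketch below estimates usable bandwidth from the raw line rate and the line encoding. It assumes PCIe 3.0 (8 GT/s per lane, 128b/130b encoding) and SATA III (6 Gb/s, 8b/10b encoding); the function name is hypothetical, and protocol overhead beyond the line encoding is ignored, so real devices deliver somewhat less.

```python
def usable_gb_per_s(raw_gbit_per_s: float, payload_bits: int, encoded_bits: int, lanes: int = 1) -> float:
    """Approximate usable bandwidth in GB/s after line-encoding overhead."""
    payload_gbit = raw_gbit_per_s * payload_bits / encoded_bits  # strip encoding bits
    return payload_gbit / 8 * lanes                              # bits -> bytes, then scale by lanes


sata3 = usable_gb_per_s(6.0, 8, 10)                  # SATA III, single link
pcie3_x4 = usable_gb_per_s(8.0, 128, 130, lanes=4)   # typical NVMe SSD link width
pcie3_x16 = usable_gb_per_s(8.0, 128, 130, lanes=16) # typical graphics card link width

print(f"SATA III:     {sata3:.2f} GB/s")
print(f"PCIe 3.0 x4:  {pcie3_x4:.2f} GB/s")
print(f"PCIe 3.0 x16: {pcie3_x16:.2f} GB/s")
```

Under these assumptions a four-lane PCIe 3.0 link offers several times the bandwidth of SATA III, which is why PCIe-attached storage is the better choice for feeding large datasets to a training job.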

What are the different types of CPU Cores?

CPU cores may either be single-core, dual-core, or multi-core.

- Single-core – a processor with only one physical core.

- Dual-core – a processor with two physical cores, which can roughly double throughput for workloads that split cleanly across both cores.

- Multi-core – a processor with more than two physical cores (8 or more is common in current-gen CPUs). The cores share the same instruction set architecture (ISA) but are essentially independent processors contained within a single package.
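How much extra cores actually help depends on how much of the workload can run in parallel. A standard way to estimate this is Amdahl's law: speedup = 1 / ((1 - p) + p / n), where p is the parallel fraction of the work and n is the number of cores. The sketch below is a direct implementation; the function name and the example fractions are assumptions for illustration.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup on `cores` cores according to Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Illustrative workloads: fully parallel, 90% parallel, and 50% parallel.
for fraction in (1.0, 0.9, 0.5):
    for cores in (2, 8):
        print(f"p={fraction:.1f}, cores={cores}: {amdahl_speedup(fraction, cores):.2f}x")
```

Only a fully parallel workload doubles its speed on two cores; with half of the work stuck in a serial section, even eight cores give less than a 2x speedup.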

With the above CPU features in mind, we can now choose the best CPU for machine learning.

Best CPU for machine learning

The king: AMD Ryzen 9 3900X

Runner-Up: Intel Core i9-9900K

Best for Deep learning: AMD Ryzen Threadripper 3990X

The cheapest Deep Learning CPU: AMD Ryzen 5 2600

2. Graphics Processing Unit (GPU) for Machine Learning

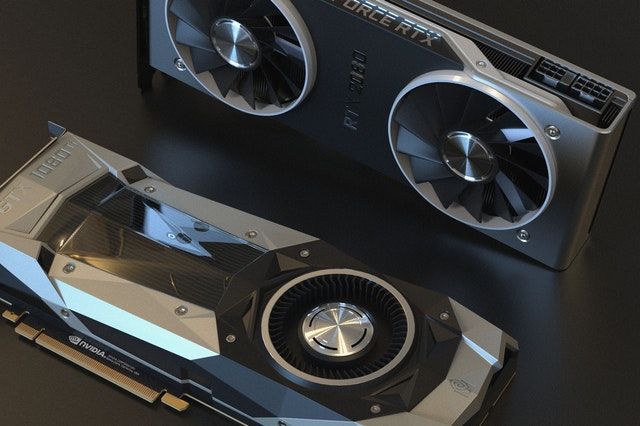

Have you ever bought a graphics card for your PC to play games? That is a GPU. It is a specialized electronic chip built to render images, using fast, dedicated memory to generate and manipulate them quickly. The technology was developed to improve a computer's ability to process 3D graphics. Over time, however, it has moved beyond this specific area into general-purpose use for massively parallel computing problems, where the GPU's massively parallel structure provides considerable benefits when applied to large sets of data, as in deep learning.

CPU vs GPU

The choice between a CPU and GPU for machine learning depends on your budget, the types of tasks you want to work with, and the size of data. GPUs are most suitable for deep learning training especially if you have large-scale problems.

GPUs are great at heavy mathematical workloads, such as the matrix operations behind neural networks, which makes them well suited to machine learning. Advanced graphics cards can also handle multiple tasks on a single card, such as gaming while a deep learning training job runs, which improves productivity and saves time.

What operations do GPUs perform better for Machine Learning?

GPUs are not the best choice for every machine learning process. They are best at operations that involve parallelism. Hence, they are used for machine learning applications that can take advantage of the GPU’s parallel processing abilities, such as CNNs and RNNs, where they provide high-speed data processing.

When are GPUs not preferred in Deep Learning?

Despite all the above advantages, it's still not ideal to use GPU for general-purpose computing tasks due to:

- High energy consumption - which is a challenge for power-limited devices such as phones and embedded computers. The cost is also very high when considering the hardware required to support a typical deep learning workstation or cloud setup.

- The type of computation must be well specified to achieve optimal performance since GPUs are generally designed with specific algorithms in mind. It's also difficult to program an algorithm for efficient execution on a GPU (but no doubt there are some ways of achieving this).

- When you intend to train non-parallel (sequential/non-concurrent) algorithms, GPUs are not a good choice. In a sequential algorithm each step depends on the result of the previous one, so the work cannot be subdivided into independent pieces. Hash-chaining is an example of such an algorithm that is very difficult to parallelize. In simple words, inherently sequential problems, such as those classed as “P-complete”, are a poor fit for GPUs.
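The hash-chaining case can be sketched in a few lines of Python. This is a minimal illustration using SHA-256 from the standard library; the function names are hypothetical. The chain has to run one step at a time because every hash takes the previous hash as its input, whereas hashing a list of unrelated items is an independent, easily parallelized job (shown here with a thread pool as a stand-in for parallel hardware).

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def sha256(data: bytes) -> bytes:
    """Return the SHA-256 digest of `data`."""
    return hashlib.sha256(data).digest()


def hash_chain(seed: bytes, length: int) -> bytes:
    """Apply SHA-256 `length` times in a row, starting from `seed`.

    Step i needs the output of step i-1, so the loop cannot be split
    into independent pieces.
    """
    value = seed
    for _ in range(length):
        value = sha256(value)
    return value


def hash_independent(items: list) -> list:
    """Hash unrelated items; each one can be processed in parallel."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(sha256, items))  # map() preserves input order


print(hash_chain(b"seed", 1000).hex())
print([digest.hex()[:8] for digest in hash_independent([b"a", b"b", b"c"])])
```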

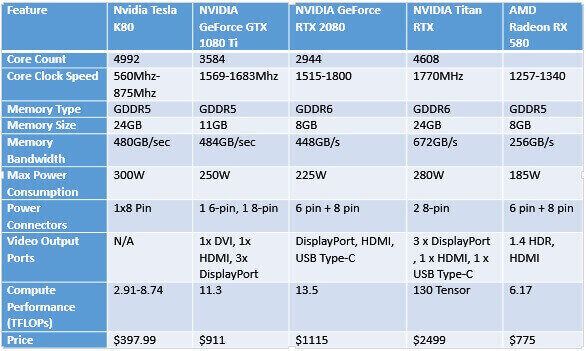

Key Features and Specifications of GPUs

If you’re looking to purchase a graphics card for machine learning, there are a few key features and specifications you should consider:

- GPU Make

- Number of CUDA Cores

- Core/base and Boost clock speed

- RAM Type (GDDR5, GDDR6, HBM2, etc.)

- Memory space

- Memory transfer speed (GBps)

- Motherboard Interface

- Power and heat regulation (thermal design)

- Power connectors

- Output Ports (HDMI etc)

- API Support

- Computation speed - TFLOPS

What is TFLOPS?

A teraFLOPS (TFLOPS) is one trillion floating-point operations per second, that is, 1 TFLOPS = 10^12 FLOPS. The abbreviation should not be confused with TPU (tensor processing unit), which is a type of AI accelerator rather than a unit of measurement.

The point of the teraFLOPS as a unit is to allow meaningful comparison of floating-point performance between different processors, GPUs, and supercomputers across architectures. Larger systems are rated on the same scale: one petaFLOPS is 10^15 and one exaFLOPS is 10^18 floating-point operations per second.
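A GPU's headline TFLOPS figure is usually a theoretical peak, which can be approximated as number of cores × clock speed × floating-point operations per core per cycle. The sketch below assumes 2 operations per cycle (one fused multiply-add counted as two operations); the function name is hypothetical and the core count and clock are illustrative inputs, so check the vendor's spec sheet for a real card.

```python
def peak_tflops(cores: int, clock_ghz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    cores * GHz gives billions of cycles of work per second (GFLOPS once
    multiplied by operations per cycle); dividing by 1000 converts to TFLOPS.
    """
    return cores * clock_ghz * flops_per_core_per_cycle / 1000.0


# Illustrative inputs: 4352 cores running at 1.545 GHz.
print(f"{peak_tflops(4352, 1.545):.1f} TFLOPS")  # prints "13.4 TFLOPS"
```

Real workloads rarely reach this peak, because memory bandwidth and data transfer often limit performance before raw arithmetic does.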

Finding the Best GPU for Machine Learning

Nvidia is the best GPU brand available for deep learning. You can get a GTX 1080 or an RTX 2080 Ti; both are good choices and should last you 3-4 years before they become obsolete compared to newer hardware. For efficient AI models, NVIDIA's Turing™ architecture adds Tensor Cores that use multi-precision computation. Turing Tensor Cores offer a range of precisions for deep learning training and inference, from FP32 to FP16 to INT8, as well as INT4, allowing for significant improvements in performance over NVIDIA Pascal™ GPUs. NVIDIA also distributes GPU-optimized deep learning frameworks for creating and training neural networks through NVIDIA GPU Cloud (NGC).
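The practical effect of lower precision is easy to estimate. The sketch below, using only the Python standard library, computes how much memory a model's weights would need at each precision and shows the rounding that FP16 storage introduces. The function names and the 100-million-parameter model are hypothetical, and the size estimate covers weights only, not activations or optimizer state.

```python
import struct

# Storage size of a single value at each precision, in bytes.
BYTES_PER_VALUE = {"FP32": 4.0, "FP16": 2.0, "INT8": 1.0, "INT4": 0.5}


def weights_size_gb(num_parameters: int, precision: str) -> float:
    """Memory needed to store the weights alone, in GB (10**9 bytes)."""
    return num_parameters * BYTES_PER_VALUE[precision] / 1e9


def round_to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Hypothetical model with 100 million parameters.
for precision in BYTES_PER_VALUE:
    print(f"{precision}: {weights_size_gb(100_000_000, precision):.2f} GB")

# FP16 cannot represent 0.1 exactly, so a small rounding error appears.
print(round_to_fp16(0.1))
```

Halving the precision halves the memory and bandwidth needed per value, which is where much of the speedup from reduced-precision hardware comes from, at the cost of some numerical accuracy.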

AMD is still years behind in terms of performance metrics when compared to Nvidia, although it does come at a cheaper price. You can use an AMD GPU for deep learning and get performance pretty much comparable to an Nvidia GTX 1060 or 1070.

The most lavish GPU for machine learning is the NVIDIA Titan RTX, but it launched at roughly $2,500, making it an ideal choice only for people with deep pockets. Realistically, you'll be hard-pressed to find a graphics card that outperforms the other GPUs on this list for anywhere near $1,000.

The AMD Radeon RX 580 provides good performance at a reasonable price, which makes it one of the most popular cards in terms of performance and cost. Note that it uses GDDR5 memory rather than high bandwidth memory (HBM2), so its memory transfer speeds are lower than those of HBM2-based cards. The AMD Radeon RX 580 can run games at 1080p and 1440p resolutions at ~60 FPS. If you're looking for a mid-range GPU, the RX 580 is a strong bet with a good price/performance ratio.

The Radeon VII is a high-end addition to AMD's Radeon family of graphics cards. It comes with 16GB of HBM2 memory, which has a staggering 1TB/s of bandwidth (everyday GPUs have up to about 512GB/s). Additionally, it is built on 7nm (nanometer) technology, which allows for increased power efficiency, and its 60 compute units deliver over 13 TeraFLOPS of FP32 performance.

For cooling, the reference card uses a triple-fan cooler to keep heat and noise in check.

The Radeon VII can max out your games at 1440p and 4K resolutions with ~60 FPS on a variety of titles such as Far Cry New Dawn, Monster Hunter World, and Anthem. The main downside is that it's expensive compared to other graphics cards on this list, which makes it less attractive to most people.