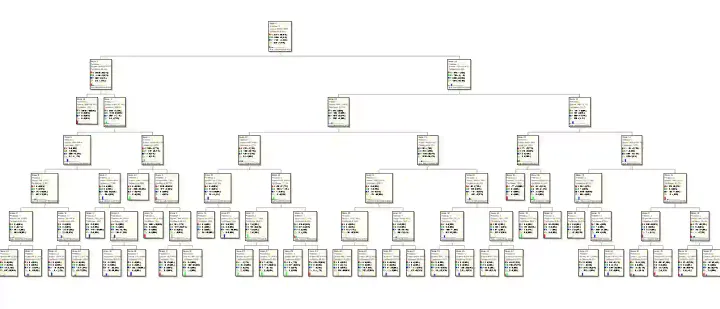

Predict Customer Attrition in Fintech using AI [part 4/5]

This is the fourth article in the series on predicting customer churn using machine learning and AI. In this article, we look at the modeling phase and cover the basics of machine learning and predictive modeling.

![Predict Customer Attrition in Fintech using AI [part 4/5]](/content/images/size/w1200/2020/01/modeling.JPG)