Emotional AI: How Does AI Sense Emotions?

The ability of AI systems to form emotional connections is critical to making robots more human-like, even if only on a basic level, because it allows them to comprehend not just actions but also the thoughts and feelings that go along with them (which requires some form of memory).

It is safe to say that artificial intelligence (AI) has become a major topic of discussion in the 21st century. As machines grow more advanced, many people have started to wonder what will happen when they eventually surpass human intelligence. Some think this is inevitable, while others fear an AI apocalypse in which robots take over and destroy humanity as we know it. If you believe that AI can never reach this level of intelligence or complexity, read on! We explore how machines might be able to embrace memories and feelings, with examples from movies such as Blade Runner 2049.

What are Emotions and Empathy?

Emotions can be defined as our internal state with regard to some object or event. Empathy is the ability to understand how somebody else feels by putting ourselves in their shoes, and to share in that person's feelings.

The idea that robots should have some sort of analog to human emotion has recently become more popular among AI researchers, especially after Google DeepMind created an algorithm capable of learning how humans construct symbols (and thus memories). As Dr. David Gunning explains it, "they used what we call a symbol-referent learner. They learn that an arrow pointing left means you would move your joystick to the left and they did this by observing humans doing things like playing Atari video games."

Making these kinds of connections matters because it lets a system relate actions to the thoughts and feelings surrounding them, and that requires some form of memory. Researchers have modeled this type of behavior with deep neural networks in systems such as Google Brain and IBM Watson.

All signs seem to point toward machines developing memories so that they can have emotions and empathy. Whether machines will reach the same level as humans is still up for debate, but they are already capable of emulating some of these capabilities in their own way!

Have Robots Ever Shown Emotions?

Scientists are interested in this question because it helps to know how robots will react when they are faced with certain situations. One research team designed a robot, Buddy the Emotional Robot, that was programmed to express happiness or sadness with its body. The team concluded that the robot did not express emotions accurately, judging by what it had learned about human behavior and by the expressions of joy or sorrow shown by people who watched videos together with it. Participants' reactions were mixed: some said that, although the robot showed no clear evidence of emotion, it evoked feelings similar to empathy, while others felt nothing at all even though their partners' reactions seemed real enough. The study showed once again how difficult it is even for humans to communicate clearly through facial expressions, so it is understandable that robots struggle too.

How Do Humans Express Their Emotions?

Humans need to communicate effectively to understand one another. We often look at someone's face or listen carefully when they tell us something important, which shows how much we rely on these subtle cues alongside what is said verbally. This makes it very difficult for robots to mimic human behavior, because there are many differences between robots and humans, even though researchers keep trying different approaches based on whatever technology is available at the time. As a result, scientists and members of the public who would like robots to express emotions are likely to be disappointed.

The good news is that robots may never need to display feelings in this way, because they could instead become valued members of society by helping us with important tasks or providing companionship. There will always be a place for humans, even as we embrace whatever technology has to offer to make life easier and more enjoyable. For example, some people feel uncomfortable speaking on the phone but communicate easily through email, while others enjoy meeting new people so much that they would never consider making friends online. It's all about finding out what works best for each person.

How Can We Record Human Emotions on a Computer?

A human face can reveal a lot about what someone is thinking or feeling, but robots cannot read that information easily. Researchers have found it difficult to design "emotion recognition" software that accurately captures subtle changes in facial expressions and tone of voice, because people express themselves in many different ways, both verbally and non-verbally. For example, some cultures are more open than others about showing emotions, and we would expect this to influence how people communicate through technology such as text messages. In addition, how well two people know each other affects whether one of them notices that something is wrong or unusual, even from small changes in behavior. On the plus side, this difficulty may become less important as we grow used to apps like Facebook, which let us show our feelings with a single click.

How Can We Achieve Artificial Empathy?

One route to artificial empathy is to create a robot that can sense and respond to human emotions. The first step in building an empathetic, emotional machine is understanding what constitutes emotion in humans. What are its necessary components? We need to understand how our minds respond to different stimuli or sensory inputs, such as smells and touch. Some people might not realize it, but some types of feelings are very similar to physical pain. For example, if you are burned, your body reacts in a similar way whether the injury was caused by fire or by ice-cold water, because both damage cells, which sends electrical impulses through the nerves up to the brain. Some emotional experiences can likewise make us feel physically hurt even though there has been no physical contact.

To create emotions in robots, we have to give them more than five senses, and not just any senses: the right ones. For example, a sense of taste would not help a robot understand emotions. The most obvious first approach is to have the machine read our facial expressions. It would work like this: the robot detects a face using computer vision, runs an algorithm that extracts information about the expression (e.g., anger), and reads our body language to judge whether we actually feel that emotion.
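The three-step idea above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the face-detection step is skipped, and `Observation`, `read_emotion`, and the per-emotion scores in [0, 1] are hypothetical stand-ins for the outputs of a real computer-vision model and a body-language model.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Observation:
    """Hypothetical outputs of the facial-expression and body-language stages."""
    face_scores: Dict[str, float]  # emotion -> score in [0, 1] from the face
    body_scores: Dict[str, float]  # emotion -> score in [0, 1] from posture


def read_emotion(obs: Observation, agree_threshold: float = 0.5) -> Optional[str]:
    """Return the facial emotion only if body language supports it."""
    if not obs.face_scores:
        return None  # no face was found or nothing was scored
    # Pick the strongest emotion suggested by the face.
    emotion = max(obs.face_scores, key=obs.face_scores.get)
    # Accept it only if body language also indicates that emotion.
    if obs.body_scores.get(emotion, 0.0) >= agree_threshold:
        return emotion
    return None  # expression seen, but not confirmed by body language


angry = Observation(face_scores={"anger": 0.8, "happiness": 0.1},
                    body_scores={"anger": 0.7})
print(read_emotion(angry))   # anger
forced = Observation(face_scores={"anger": 0.8}, body_scores={"anger": 0.2})
print(read_emotion(forced))  # None
```

Here a facial reading is accepted only when body language agrees; the 0.5 threshold is an arbitrary choice for the sketch.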

How can we create emotions? We could increase robots' processing power in certain areas so they can make decisions more quickly; however, there will always be some delay before a reaction, because sensing, interpreting, and choosing a response all take time. This also raises challenges such as deciding which response suits which situation and making sure responses feel realistic to the people who work with these machines every day. For example, your relationship with someone might call for a longer hug than you would give others. A robot might not take this into account, which could lead to misunderstandings or strange reactions from the people who work closely with it.

How do we make them more empathetic? We need to give machines the ability to understand emotions so they can better empathize with us. For example, if someone close to us dies, we feel sad and cry, because these are natural human responses to such events. Robots, however, cannot accurately read emotional signals without first understanding how humans process information emotionally. This is one reason the field has evolved so rapidly in recent years, as engineers have started taking “emotion” more seriously now that computers have become so widespread.

How Can a Machine Read Our Facial Expressions?

The recognition of facial expressions is crucial for everyday social interaction. Successful social interactions rely on rapid and accurate detection of other people's emotional states, followed by mirroring or empathy processes that share that emotion. Automating these processes could provide a foundation for affective computing, allowing computers to infer human emotions by interpreting facial expressions. The main challenge is the diversity of facial expressions: there are hundreds if not thousands of possible expressions, with distinct movements across different regions of the face, yet only around six core emotions (happiness, surprise, anger, sadness, disgust, and fear) are universally recognized. Previous work addressed this problem using classifiers trained on data from limited sets of individuals and facial expressions, which resulted in systems with restricted performance.
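To make the six core emotions concrete, here is a minimal sketch of the final step of such a classifier: turning raw scores into a probability for each of the six labels with a softmax and picking the most likely one. The `classify` function and the example scores are hypothetical; a real system would get its scores from a trained model.

```python
import math
from typing import List, Tuple

# The six emotions described above as universally recognized.
EMOTIONS = ["happiness", "surprise", "anger", "sadness", "disgust", "fear"]


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: List[float]) -> Tuple[str, float]:
    """Return the most probable emotion and its probability."""
    if len(logits) != len(EMOTIONS):
        raise ValueError("expected one score per emotion")
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return EMOTIONS[best], probs[best]


# Made-up scores standing in for a hypothetical trained model's output.
label, p = classify([2.0, 0.5, 0.1, 0.0, -1.0, 0.3])
print(label, round(p, 2))
```

A classifier trained on few people tends to produce confident but wrong scores for unfamiliar faces, which is the limitation the paragraph describes.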

To address this challenge, one study presented a large dataset of face videos with manually annotated emotion labels for diverse facial expressions (up to 400 frames per subject). It was supplemented by novel data augmentation techniques that expand the training set without requiring additional labeled data. A new convolutional neural network architecture was then used to train end-to-end recognition systems that combine local information with information from regions across the whole face. The researchers reported state-of-the-art results on benchmark datasets and strong cross-dataset performance, outperforming previous methods.
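The idea that augmentation grows a training set without new labels can be illustrated with a small, dependency-free sketch. It assumes a grayscale face stored as a list of pixel rows with values in [0, 1]; the transforms (mirror, one-pixel shift, brightness change) and all function names are common examples chosen for illustration, not the techniques from the study described above.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # grayscale pixels in [0, 1], one list per row


def flip_horizontal(img: Image) -> Image:
    """Mirror the face left to right."""
    return [row[::-1] for row in img]


def shift_right(img: Image, pixels: int) -> Image:
    """Move the face right, filling the vacated left columns with black (0.0)."""
    out = []
    for row in img:
        k = min(pixels, len(row))
        out.append([0.0] * k + row[:len(row) - k])
    return out


def adjust_brightness(img: Image, factor: float) -> Image:
    """Simulate a lighting change, clipping values back into [0, 1]."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in img]


def augment(img: Image, label: str, rng: random.Random) -> List[Tuple[Image, str]]:
    """Return the original plus three variants, all sharing the original label."""
    variants = [
        img,
        flip_horizontal(img),
        shift_right(img, 1),
        adjust_brightness(img, rng.uniform(0.8, 1.2)),
    ]
    # No new annotation is needed: every variant reuses `label`.
    return [(v, label) for v in variants]


face = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
examples = augment(face, "fear", random.Random(0))
print(len(examples))  # 4
```

One labeled face becomes four training examples, which is the sense in which augmentation avoids extra labeling work.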

How Can Facial Expressions Confuse a Machine?

Today, machines are an effective tool for developing many features. Even though scientists keep working on problems that computers and robots have not solved yet, some limitations remain frustrating. One that is often discussed is the "uncanny valley" effect, also known as the uncanny valley hypothesis, a concept that comes from robotics and animation. Machine learning has improved over the years, but it still cannot cope with highly realistic human faces, because it does not understand some of the characteristics that determine facial expressions.

When people see faces that look almost, but not quite, real, they feel eeriness instead of amazement. This happens because an animated or robotic face with some human features does not react the way a real person does. The term was coined in 1970 by the roboticist Masahiro Mori, but only recently have its negative implications for virtual characters been highlighted. Many other factors, such as skin color and lighting conditions, also keep machines from recognizing faces perfectly.

In one study, researchers used images of humans and robots that were either smiling or showing anger, and the machine became confused when it was given both signals at once (i.e., people smiling while angry). The main reason for this dilemma is that even though the machine can process facial expressions, it doesn't have enough memory to identify them.
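A simple way to see why mixed signals trip up a classifier: if a model must choose a single emotion, a face that scores almost equally for happiness and anger produces two nearly tied probabilities. The sketch below flags such cases as ambiguous instead of forcing a label. It is a hypothetical illustration; the `interpret` function, the scores, and the 0.15 margin are assumptions, not the method used in the study.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Convert raw per-emotion scores into probabilities that sum to 1."""
    m = max(scores.values())
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def interpret(scores: Dict[str, float], margin: float = 0.15) -> str:
    """Return the top emotion, or flag the face as ambiguous when the
    two most likely emotions are too close to call."""
    if len(scores) < 2:
        raise ValueError("need scores for at least two emotions")
    ranked = sorted(softmax(scores).items(), key=lambda kv: kv[1], reverse=True)
    (top, p1), (second, p2) = ranked[0], ranked[1]
    if p1 - p2 < margin:
        return f"ambiguous: {top} or {second}"
    return top


# A smiling but angry face: happiness and anger score almost equally.
print(interpret({"happiness": 2.0, "anger": 1.95, "sadness": -1.0}))
# ambiguous: happiness or anger
print(interpret({"happiness": 3.0, "anger": 0.0}))  # happiness
```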

The goal is for machines to overcome this kind of problem, and many researchers are still working on the new capabilities needed, which could make development in this field more efficient.

Machine learning tries to imitate the way humans learn new things instead of programming everything explicitly. It uses algorithms that change according to their inputs, so they avoid being trapped by predetermined details and can solve problems at their own pace. Even though researchers are trying hard, some people think robots should be given specific, predefined emotions instead of trying to meet human expectations through their software, since they do not understand human beings yet. After all, machines are only tools that need improvement before they can interact with humans more naturally.
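The contrast between learning from inputs and programming every rule can be shown with a perceptron, one of the simplest learning algorithms: it starts with no rule and adjusts its weights only when it gets an example wrong. The two features (how much the mouth corners lift and how much the brows lower) and the tiny dataset are hypothetical, chosen only to make the learning step visible.

```python
from typing import List, Tuple

Example = Tuple[List[float], int]  # (features, label) with label +1 or -1


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Classify x as +1 or -1 using the current weights."""
    activation = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if activation >= 0 else -1


def train_perceptron(examples: List[Example], epochs: int = 20,
                     lr: float = 0.1) -> Tuple[List[float], float]:
    """Learn weights from examples instead of hand-coding a rule."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            if predict(w, b, x) != y:
                # Nudge the weights toward the correct answer only on mistakes.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


# Hypothetical features: [mouth-corner lift, brow lowering]; +1 = happy.
data = [([0.9, 0.1], 1), ([0.8, 0.2], 1), ([0.1, 0.9], -1), ([0.2, 0.7], -1)]
w, b = train_perceptron(data)
print(predict(w, b, [0.85, 0.15]))  # 1
print(predict(w, b, [0.15, 0.8]))   # -1
```

No rule such as "raised mouth corners mean happiness" is written anywhere; the weights that encode it come from the examples.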

What factors determine the complexity of human emotions? How can computers use these factors to create artificial emotions?

As stated by Kyle and Mark before, artificial intelligence (AI) is the study of building machines that work and react like humans. This means AI researchers try to make their machines' emotional responses as close as possible to human ones. However, simply telling a computer what we feel is not enough; it needs to perceive our feelings and understand them. Such technology could benefit people who have difficulty with social interaction, such as autistic people or those with mental disorders. A good example is Emma, a chatbot developed by the MIT Media Lab to help people suffering from depression.

One way for computers to detect human emotions is through physiological sensors, devices that measure physical quantities such as temperature, pressure, or speed. For emotion detection, sensors in phones and other digital devices assess how people feel while using them. The Microsoft Band, for example, combines heart rate, perspiration, and skin temperature to gather data about your emotional state, which it displays on its screen. Another option is measuring brain activity with techniques such as electroencephalography (EEG), functional magnetic resonance imaging (fMRI), or positron emission tomography (PET).
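A minimal sketch of how wearable readings might be turned into a single signal, assuming hypothetical heart-rate and skin-conductance (perspiration) readings and a per-person resting baseline. It compares each reading with the wearer's baseline using a z-score and averages the results. This is an illustration only, not how the Microsoft Band or any specific device works.

```python
from statistics import mean, stdev
from typing import Dict, List


def z_score(value: float, baseline: List[float]) -> float:
    """How many standard deviations `value` sits above the resting baseline."""
    sd = stdev(baseline)
    return 0.0 if sd == 0 else (value - mean(baseline)) / sd


def arousal_score(current: Dict[str, float],
                  baselines: Dict[str, List[float]]) -> float:
    """Average z-score across all signals, relative to this wearer's baseline."""
    return mean(z_score(current[name], baselines[name]) for name in current)


def arousal_level(score: float, threshold: float = 1.0) -> str:
    """Turn the score into a coarse label; the threshold is an arbitrary choice."""
    return "elevated" if score >= threshold else "near baseline"


# Hypothetical resting readings for one person.
baselines = {
    "heart_rate": [60, 62, 64, 61, 63],             # beats per minute
    "skin_conductance": [2.0, 2.1, 1.9, 2.0, 2.0],  # microsiemens (sweat)
}
now = {"heart_rate": 90, "skin_conductance": 3.0}
print(arousal_level(arousal_score(now, baselines)))  # elevated
```

The result is a level of arousal, not a named emotion: a high score says the body is activated, but not whether the cause is fear, excitement, or nerves before a talk.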

Emotional Intelligence (EQ) is also used to describe someone's ability to understand human emotions. EQ can be defined as the capacity for recognizing our feelings and those of others, for motivating ourselves, and for managing emotions well in ourselves and our relationships with other people. EQ is considered a crucial element for successful human interaction, as well as a key factor to achieve successful adaptation to a constantly changing environment.

Researchers have been trying to use EQ as a way of distinguishing the emotions experienced by humans from those expressed by computers. This can be done through observational studies or by asking users which they prefer when presented with different types of emotional expression. Many studies focus on how complex human emotions are and how they work.

Despite all these efforts, researchers still have difficulty building AI machines that can successfully mimic human emotions. First, although we may know what we feel, expressing it is another thing altogether. For example, it is easy to say that we are happy, but there are many ways to express happiness; this is a huge challenge for computers, which need to understand what kind of happiness people experience and how to replicate it. Moreover, detecting human emotions is not as simple as some might think.

Although facial expressions and physical signs such as perspiration and an increased heart rate can be used by machines to detect our feelings, they can also give them false information. For example, if someone is nervous before giving an important talk, his or her perspiration levels will increase. This does not necessarily mean the person is afraid of failing the presentation.

Can Social Media Help Us Better Understand How Humans Feel?

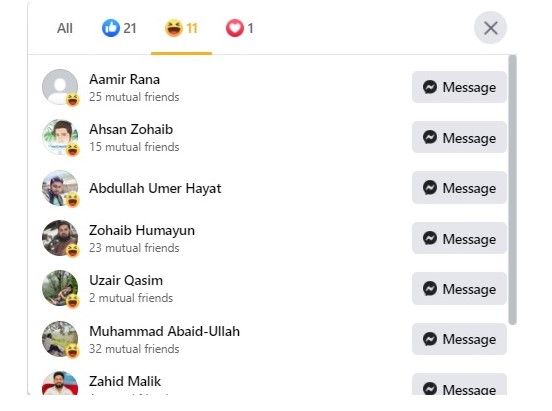

Yes! It has been done already. Consider the picture above.

Here is the public's reaction to this post:

The post is about molecular biology, but the public's responses show that it was taken as some kind of joke. By reading these responses, the AI behind Facebook can recognize that the post was a joke. But what kind of joke? According to the demographics, only men responded and no women did, so the machine could infer that this was either a male-oriented joke or something offensive to women. Since no women responded in anger, the AI should conclude that it was a male-oriented joke or sarcasm.
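The reasoning above can be written down as a few hypothetical rules over reaction data. The sketch assumes each reaction arrives as a `(group, reaction)` pair using Facebook's reaction names; the rules, the `interpret_reactions` function, and the output wording are illustrative assumptions, not how Facebook's systems actually work.

```python
from collections import Counter
from typing import List, Tuple


def interpret_reactions(reactions: List[Tuple[str, str]]) -> str:
    """Apply simple, hypothetical rules to (group, reaction) pairs."""
    if not reactions:
        return "no reactions"
    counts = Counter(reaction for _, reaction in reactions)
    # Rule 1: treat the post as a joke only if most reactions are laughs.
    if counts["haha"] * 2 < len(reactions):
        return "not read as a joke"
    responders = sorted({group for group, _ in reactions})
    angry_groups = sorted({group for group, r in reactions if r == "angry"})
    # Rule 2: angry reactions from a group suggest the joke offended it.
    if angry_groups:
        return "joke, possibly offensive to: " + ", ".join(angry_groups)
    # Rule 3: laughter from a single group suggests a joke aimed at that group.
    if len(responders) == 1:
        return f"joke, likely aimed at a {responders[0]} audience"
    return "joke with broad appeal"


reactions = [("male", "haha")] * 4 + [("male", "like")]
print(interpret_reactions(reactions))  # joke, likely aimed at a male audience
```

Rules like these are brittle; a production system would learn such patterns from data rather than hard-code them.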

Previously, Facebook offered only a limited set of reactions to a post. Now there are seven: like, love, care, haha, wow, sad, and angry. As a social platform, Facebook is expected to accommodate emotions. LinkedIn, by contrast, is a professional platform and offers a more work-oriented set of reactions (such as Celebrate and Insightful) rather than the same emotional range. Considering these points, it seems clear that platforms are already recording our emotions and the reasons behind them, and this data may soon be incorporated into robotic memory to better mimic human emotions.
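One way to turn reaction counts into an emotional profile of a post is to map each reaction to an emotion category and normalize the counts. The mapping and the `emotion_profile` function below are assumptions made for illustration; Facebook does not publish such a table.

```python
from collections import Counter
from typing import Dict, List

# Facebook's seven reactions mapped to rough emotion categories.
# This mapping is an illustrative assumption, not an official Facebook table.
REACTION_TO_EMOTION = {
    "like": "approval",
    "love": "affection",
    "care": "sympathy",
    "haha": "amusement",
    "wow": "surprise",
    "sad": "sadness",
    "angry": "anger",
}


def emotion_profile(reactions: List[str]) -> Dict[str, float]:
    """Share of each emotion among a post's reactions (unknown ones ignored)."""
    counts = Counter(
        REACTION_TO_EMOTION[r] for r in reactions if r in REACTION_TO_EMOTION
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {emotion: n / total for emotion, n in counts.most_common()}


print(emotion_profile(["haha", "haha", "like", "angry"]))
# {'amusement': 0.5, 'approval': 0.25, 'anger': 0.25}
```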

In the end, if researchers are to succeed in creating emotional robots, they have to combine major areas of AI, including machine vision (to record facial expressions), NLP (to read text written by someone in emotional distress), speech recognition (to pick up the pitch and layering of the voice that reveal emotional state), and the different sensors discussed above.
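A common way to combine several of these sources is late fusion: each modality produces its own emotion probabilities, and a weighted average merges them. The sketch below assumes hypothetical per-modality outputs and hand-picked weights; `late_fusion` is an illustrative name, and real systems would learn the weights or use more elaborate fusion.

```python
from typing import Dict

Probs = Dict[str, float]  # emotion -> probability from one modality


def late_fusion(modalities: Dict[str, Probs], weights: Dict[str, float]) -> Probs:
    """Weighted average of per-modality emotion probabilities.
    Modalities with no (or non-positive) weight are ignored."""
    fused: Probs = {}
    total_weight = 0.0
    for name, probs in modalities.items():
        w = weights.get(name, 0.0)
        if w <= 0:
            continue
        total_weight += w
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + w * p
    if total_weight == 0:
        return {}
    return {emotion: v / total_weight for emotion, v in fused.items()}


# Hypothetical outputs of a vision model, a text (NLP) model, and a speech model.
outputs = {
    "vision": {"sadness": 0.7, "anger": 0.3},
    "text": {"sadness": 0.9, "anger": 0.1},
    "speech": {"sadness": 0.4, "anger": 0.6},
}
weights = {"vision": 1.0, "text": 1.0, "speech": 2.0}
fused = late_fusion(outputs, weights)
print(max(fused, key=fused.get))  # sadness
```

Even though the speech model alone leans toward anger, the combined evidence favors sadness, which is the benefit of drawing on several areas of AI at once.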